Improving the Naturalness of Synthesized Spectrograms for TTS Using GAN-Based Post-Processing

This is the accompanying website for the following paper:

- Paolo Sani, Judith Bauer, Frank Zalkow, Emanuël A. P. Habets, and Christian Dittmar

Improving the Naturalness of Synthesized Spectrograms for TTS Using GAN-Based Post-Processing

In Proceedings of the ITG Conference on Speech Communication: 270–274, 2023.

PDF: https://ieeexplore.ieee.org/document/10363041

Details: https://www.audiolabs-erlangen.de/resources/NLUI/2023-ITG-postprocessing

DOI: 10.30420/456164053

    @inproceedings{SaniBZHD23_Postprocessing_ITG,
      author      = {Paolo Sani and Judith Bauer and Frank Zalkow and Emanu{\"e}l A.\ P.\ Habets and Christian Dittmar},
      title       = {Improving the Naturalness of Synthesized Spectrograms for {TTS} Using {GAN}-Based Post-Processing},
      booktitle   = {Proceedings of the {ITG} Conference on Speech Communication},
      address     = {Aachen, Germany},
      year        = {2023},
      pages       = {270--274},
      doi         = {10.30420/456164053},
      url-pdf     = {https://ieeexplore.ieee.org/document/10363041},
      url-details = {https://www.audiolabs-erlangen.de/resources/NLUI/2023-ITG-postprocessing},
    }

Abstract

Recent text-to-speech (TTS) architectures usually synthesize speech in two stages. Firstly, an acoustic model predicts a compressed spectrogram from text input. Secondly, a neural vocoder converts the spectrogram into a time-domain audio signal. However, the synthesized spectrograms often substantially differ from real-world spectrograms. In particular, they miss fine-grained details, which is referred to as the “over-smoothing effect.” Consequently, the audio signals generated by the vocoder may contain audible artifacts. We propose a spectrogram post-processing model based on generative adversarial networks (GANs) to improve the naturalness of synthesized spectrograms. In our experiments, we use acoustic models of varying quality (yielding different degrees of artifacts) and conduct listening tests, which show that our approach can substantially improve the naturalness of synthesized spectrograms. This improvement is especially significant for highly degraded spectrograms, which miss fine-grained details or harmonic content.
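As a rough illustration of the over-smoothing effect mentioned in the abstract, the following toy sketch blurs a random array along the time axis and compares a crude "detail" measure before and after. Everything here is an assumption made for illustration: the random array merely stands in for a log-mel spectrogram, the moving average is not how an acoustic model produces smooth output, and `detail_energy` is a hypothetical proxy, not a metric from the paper.

```python
import numpy as np


def smooth_along_time(spec: np.ndarray, width: int = 5) -> np.ndarray:
    """Moving average over the time axis (axis 1) of a (bins, frames) array."""
    kernel = np.ones(width) / width
    return np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="same"), 1, spec
    )


def detail_energy(spec: np.ndarray) -> float:
    """Mean squared frame-to-frame difference, a crude proxy for fine detail."""
    return float(np.mean(np.diff(spec, axis=1) ** 2))


rng = np.random.default_rng(0)
# Hypothetical stand-in for a log-mel spectrogram: 80 bins x 200 frames.
real_like = rng.normal(size=(80, 200))
smoothed = smooth_along_time(real_like)

# The smoothed array keeps its shape but loses most frame-to-frame variation.
print(detail_energy(real_like), detail_energy(smoothed))
```

In this toy setting, the smoothed array has far less frame-to-frame variation than the original, which loosely mirrors the missing fine-grained details that a post-processing model would aim to restore.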

Audio Samples

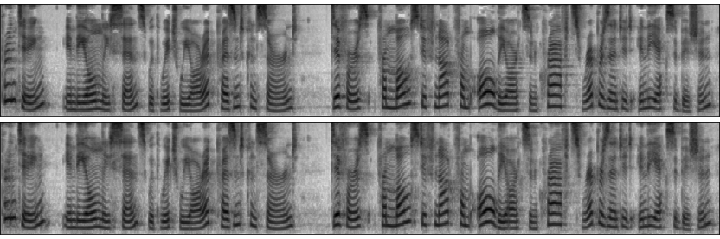

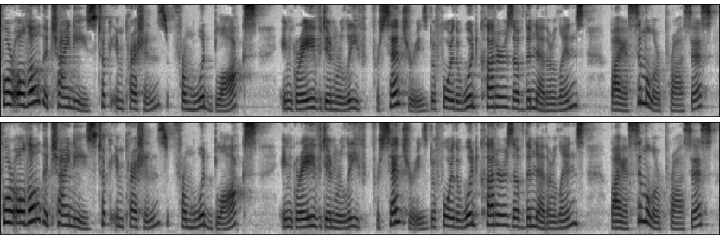

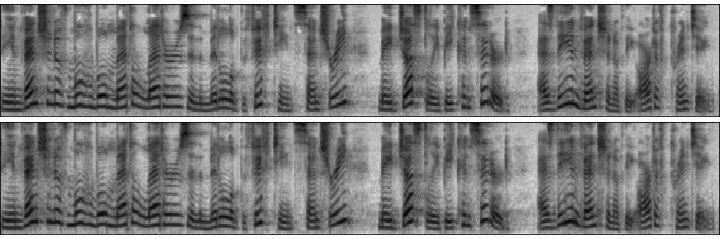

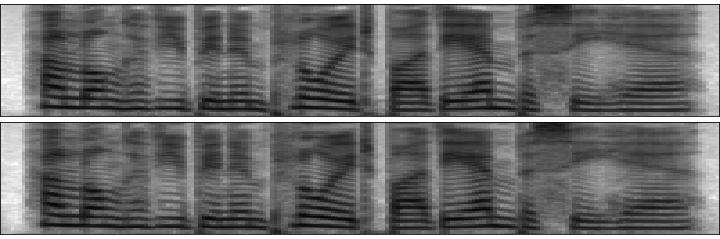

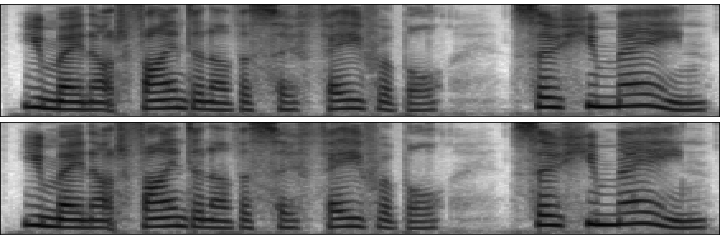

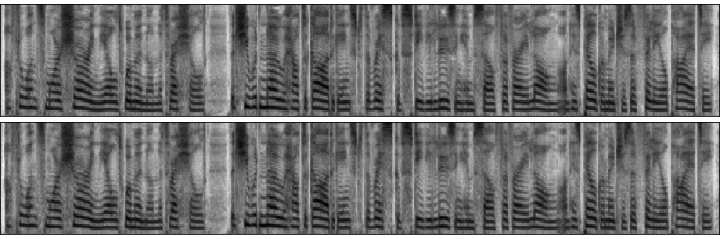

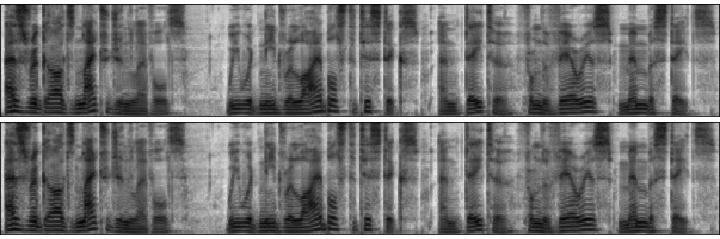

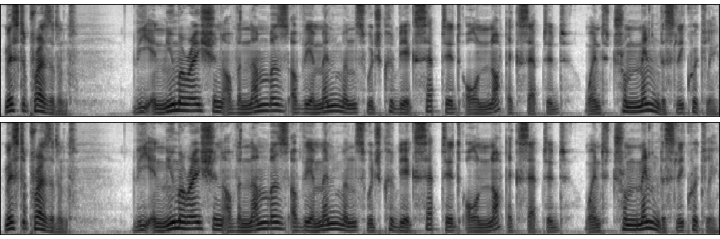

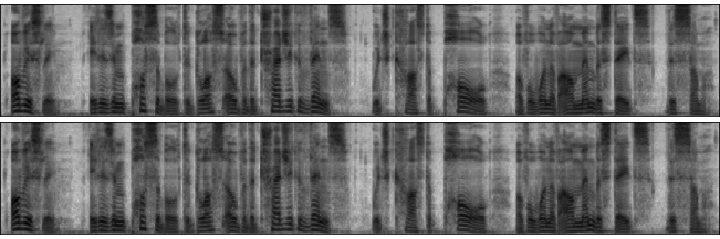

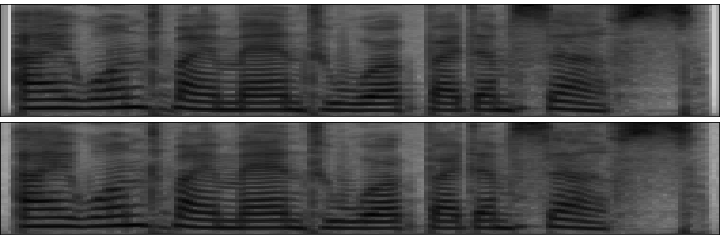

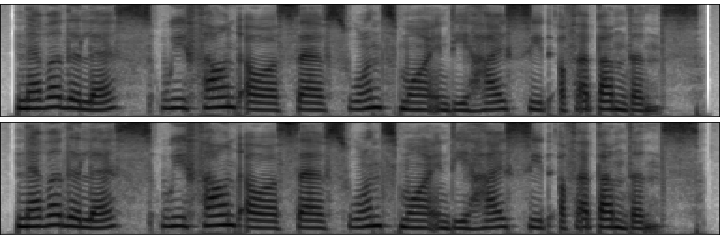

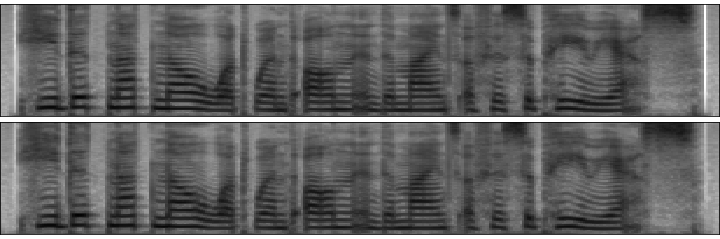

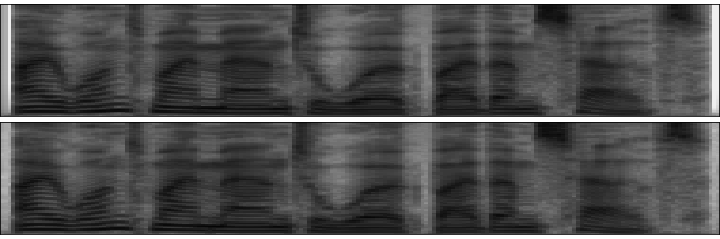

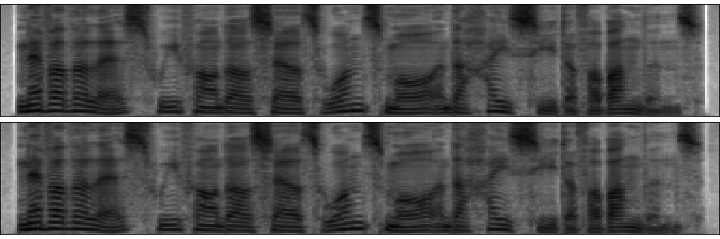

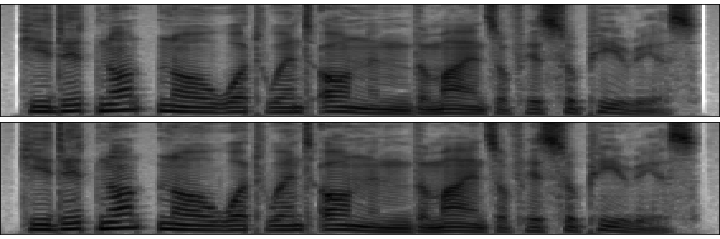

Here, we provide audio samples to illustrate the results of our paper. For a given ground-truth audio sample from the test set, we provide a reference obtained by copy synthesis (REF), the vocoded output from the acoustic model (RAW), and the vocoded result of our GAN-based post-processing model (GAN). We show the results for the four acoustic model versions considered in the paper, trained for 1k, 5k, 10k, and 300k iterations, respectively.
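The three conditions described above can be sketched as three paths into the same vocoder. This is a minimal sketch under stated assumptions: the function names (`build_conditions`, `extract_mel`, `acoustic_model`, `postprocess`, `vocoder`) are hypothetical placeholders rather than the authors' code, and copy synthesis is taken in its usual sense of vocoding a spectrogram extracted from the real recording. The toy stand-ins exist only so the example runs.

```python
from typing import Callable, Dict

import numpy as np

Spectrogram = np.ndarray  # assumed shape: (mel_bins, frames)
Audio = np.ndarray        # assumed shape: (samples,)


def build_conditions(
    text: str,
    ground_truth_audio: Audio,
    extract_mel: Callable[[Audio], Spectrogram],
    acoustic_model: Callable[[str], Spectrogram],
    postprocess: Callable[[Spectrogram], Spectrogram],
    vocoder: Callable[[Spectrogram], Audio],
) -> Dict[str, Audio]:
    """Return one vocoded signal per listening-test condition."""
    predicted = acoustic_model(text)
    return {
        # REF: copy synthesis, i.e. vocode the spectrogram of the real recording.
        "REF": vocoder(extract_mel(ground_truth_audio)),
        # RAW: vocode the acoustic model output unchanged.
        "RAW": vocoder(predicted),
        # GAN: vocode the post-processed acoustic model output.
        "GAN": vocoder(postprocess(predicted)),
    }


# Toy stand-ins so the sketch runs; none of these are real models.
def toy_extract_mel(audio: Audio) -> Spectrogram:
    return audio.reshape(1, -1)


def toy_acoustic_model(text: str) -> Spectrogram:
    return np.zeros((1, len(text)))


def toy_postprocess(spec: Spectrogram) -> Spectrogram:
    return spec + 1.0


def toy_vocoder(spec: Spectrogram) -> Audio:
    return spec.ravel()


conditions = build_conditions(
    "hello",
    np.arange(4.0),
    toy_extract_mel,
    toy_acoustic_model,
    toy_postprocess,
    toy_vocoder,
)
```

Because all three conditions share the vocoder, audible differences between RAW and GAN can be attributed to the spectrogram fed into it, while REF indicates what the vocoder achieves with a spectrogram taken from real speech.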

The audio samples are presented in an interactive web audio player, where you see two mel spectrograms along with the different audio conditions. The upper visualization always refers to the ground-truth spectrogram (obtained from the real recording, unlike REF obtained by copy synthesis) and the lower visualization refers to the respective audio condition that is currently selected.

For demonstration purposes, we selected three samples for each speaker of our multi-speaker TTS system. The datasets that are the basis for the TTS system's speakers are listed below, each followed by the transcripts of its three samples.

LJ Speech 1.1

Printing, in the only sense with which we are at present concerned, differs from most if not from all the arts and crafts represented in the Exhibition

For although the Chinese took impressions from wood blocks engraved in relief for centuries before the woodcutters of the Netherlands, by a similar process

the invention of movable metal letters in the middle of the fifteenth century may justly be considered as the invention of the art of printing.

Hi-Fi TTS (speaker ID 92 only)

"How long have you been employed by the Embassy here?"

You may not find another so favourable, so humane.

After once more assuring the old woman on the threshold that she would know how to guard against the risk of Stevie losing himself for very long on his pilgrimages of filial piety,

TC-STAR

As regards nitrogen levels, we would need reliable statistics and data from the various Member States.

Mister President, the enumeration of the numbers of the amendments which could be accepted or not accepted, went tremendously quickly.

Obviously, it is impossible to gloss over the tragic events which cast a pall over one of our Member States this summer.

IIS Omondi Female

i want my men to work by themselves.

nevertheless we found ourselves once more in the high seat of abundance.

he did not know what went on in the minds of his superiors.

IIS Omondi Male

i want my men to work by themselves.

nevertheless we found ourselves once more in the high seat of abundance.

he did not know what went on in the minds of his superiors.

Acknowledgements

We thank all participants of our listening test. Parts of this work have been supported by the SPEAKER project (FKZ 01MK20011A), funded by the German Federal Ministry for Economic Affairs and Climate Action. In addition, this work was supported by the Free State of Bavaria in the DSAI project. The International Audio Laboratories Erlangen are a joint institution of the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and Fraunhofer Institute for Integrated Circuits IIS. The authors gratefully acknowledge the technical support and HPC resources provided by the Erlangen National High Performance Computing Center (NHR@FAU) of the FAU.
