RT-PAD-VC – Creative applications of neural voice conversion as an audio effect

This is the accompanying website for the following demo paper:

- Paolo Sani, Edgar Andres Suarez Guarnizo, Kishor Kayyar Laskhminarayana, and Christian Dittmar

RT-PAD-VC – Creative applications of neural voice conversion as an audio effect

Submitted to the International Conference on Digital Audio Effects (DAFx), 2025.

@inproceedings{KalitaDSZHP24_PAD-VC_IWAENC, author = {Paolo Sani and Edgar Andres Suarez Guarnizo and Kishor Kayyar Laskhminarayana and Christian Dittmar}, title = {{RT-PAD-VC} – {Creative} applications of neural voice conversion as an audio effect}, booktitle = {Submitted to the International Conference on Digital Audio Effects ({DAFx})}, address = {Ancona, Italy}, year = {2025}, }

Abstract

Streaming-enabled voice conversion (VC) has the potential for many creative applications as an audio effect. This demo paper details our low-latency, real-time implementation of the recently proposed Prosody-aware Decoder Voice Conversion (PAD-VC). Building on this technical foundation, we explore and demonstrate diverse use cases in the creative processing of speech and vocal recordings. Enabled by its voice cloning capabilities and fine-grained controllability, RT-PAD-VC can be used as a real-time audio effects processor for gender conversion, timbre- and formant-preserving pitch shifting, vocal harmonization, and cross-synthesis from musical instruments. The on-site demo setup will allow participants to interact playfully with our technology.

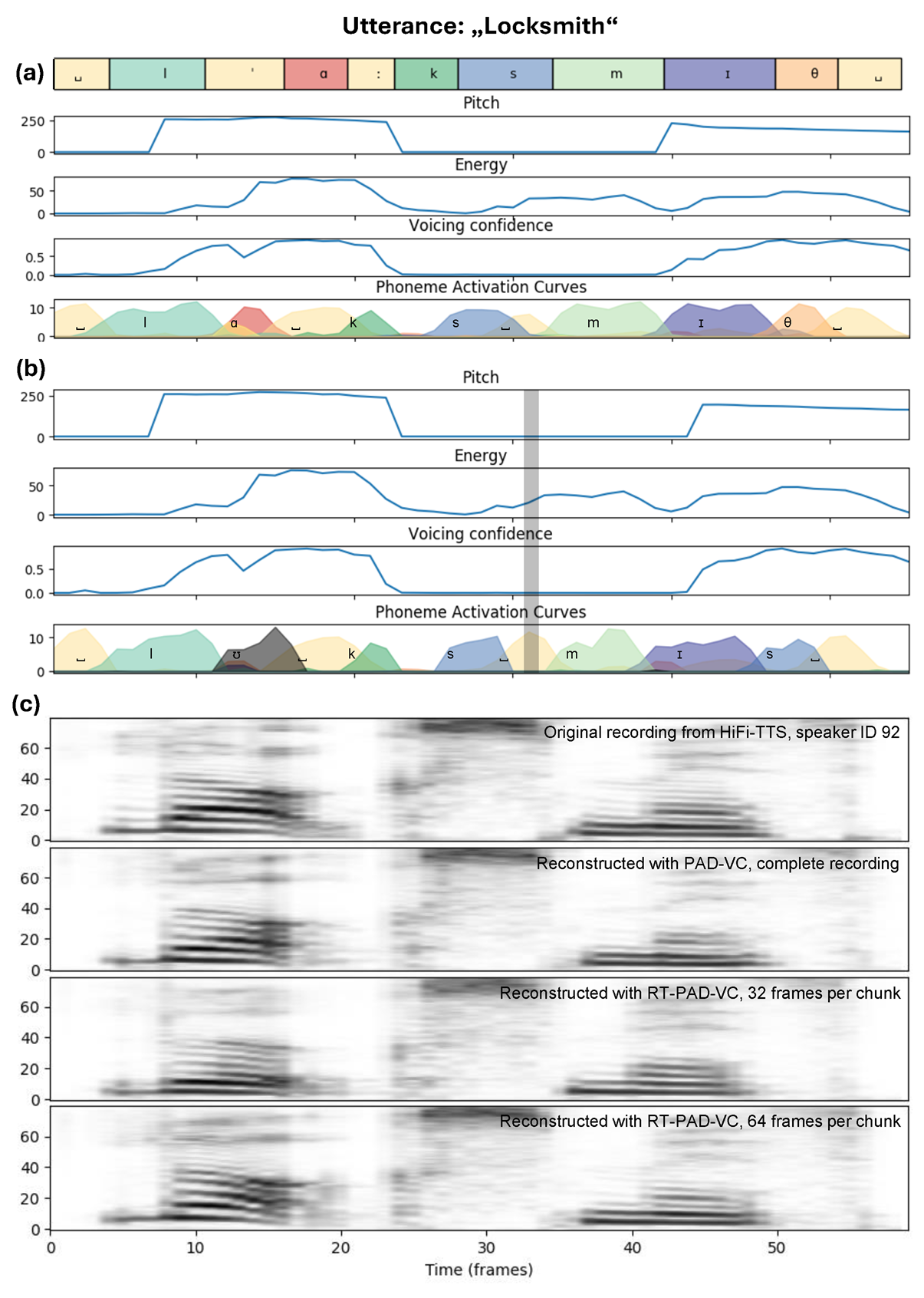

Audio examples demonstrating the impact of chunk-wise voice conversion

Here, we provide audio samples illustrating the effect of chunk sizes that are too short in the streaming processing of RT-PAD-VC. We also include an extended version of Figure 2 from our demo paper with a playhead that is synchronized with the audio examples. We reconstruct the utterance "Locksmith"; in this case, the source speaker is the same as the target speaker. The prosody and phonetic posteriorgram (PPG) features extracted from the complete utterance are shown in (a). The prosody and PPG features extracted with a chunk size of 32 frames are shown in (b). Finally, the mel-spectrograms of the original and the three synthesized utterances are shown in (c).
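To make the chunk-size effect concrete, the following toy sketch (an illustration, not the RT-PAD-VC implementation) splits a frame sequence into fixed-size chunks and applies a context-dependent feature to each chunk independently. The feature here is a hypothetical per-chunk mean normalization chosen only because its output depends on how much context it sees; the real prosody and PPG extractors are different. Because each chunk only sees its own frames, the result deviates from processing the whole utterance at once, which is the same kind of mismatch visible between panels (a) and (b). The function names and the hop size and sample rate in the latency estimate are assumptions.

```python
from typing import List, Sequence


def split_into_chunks(frames: Sequence[float], chunk_size: int) -> List[List[float]]:
    """Split a frame sequence into consecutive chunks of at most chunk_size frames."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [list(frames[i:i + chunk_size]) for i in range(0, len(frames), chunk_size)]


def mean_normalize(frames: Sequence[float]) -> List[float]:
    """Toy context-dependent feature: subtract the mean of the frames it sees."""
    mean = sum(frames) / len(frames)
    return [x - mean for x in frames]


def chunkwise(frames: Sequence[float], chunk_size: int) -> List[float]:
    """Apply mean_normalize to each chunk independently and concatenate the results."""
    out: List[float] = []
    for chunk in split_into_chunks(frames, chunk_size):
        out.extend(mean_normalize(chunk))
    return out


def chunk_latency_ms(chunk_size: int, hop_size: int, sample_rate: int) -> float:
    """Buffering delay of one chunk in ms (hop_size and sample_rate are hypothetical)."""
    return 1000.0 * chunk_size * hop_size / sample_rate


if __name__ == "__main__":
    contour = [float(i) for i in range(96)]       # a rising ramp over 96 frames
    full = mean_normalize(contour)                # whole-utterance context
    chunked = chunkwise(contour, chunk_size=32)   # 32-frame chunks
    max_diff = max(abs(a - b) for a, b in zip(full, chunked))
    print(f"max deviation: {max_diff:.1f}")
    print(f"latency per chunk: {chunk_latency_ms(32, 256, 22050):.1f} ms")
```

Larger chunks give each feature extractor more context and reduce the mismatch, at the cost of a longer buffering delay per chunk.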

Audio examples demonstrating gender conversion

Here, we show how PAD-VC can be used to convert from a male source voice timbre to a female target voice timbre and vice versa, while allowing independent control over the pitch trajectory.
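The page does not state how the target pitch trajectories were set. One widely used option, shown here only as a hedged illustration, is to match the log-F0 mean and standard deviation of the source contour to target-speaker statistics, optionally followed by a semitone offset for independent pitch control. The speaker statistics below are invented, and the convention that unvoiced frames carry F0 = 0 is an assumption.

```python
import math
from typing import List, Sequence


def transfer_log_f0(
    f0_hz: Sequence[float],
    src_mean: float,
    src_std: float,
    tgt_mean: float,
    tgt_std: float,
    offset_semitones: float = 0.0,
) -> List[float]:
    """Map voiced F0 via log-domain mean/std matching; unvoiced frames (F0 <= 0) stay 0."""
    out: List[float] = []
    for f in f0_hz:
        if f <= 0.0:
            out.append(0.0)  # unvoiced frame: nothing to convert
            continue
        z = (math.log(f) - src_mean) / src_std             # standardize in the log-F0 domain
        log_f = tgt_mean + z * tgt_std                      # rescale to target-speaker statistics
        log_f += offset_semitones * math.log(2.0) / 12.0    # optional extra pitch offset
        out.append(math.exp(log_f))
    return out


if __name__ == "__main__":
    # Hypothetical statistics: male source around 110 Hz, female target around 220 Hz.
    src_mean, src_std = math.log(110.0), 0.15
    tgt_mean, tgt_std = math.log(220.0), 0.15
    contour = [0.0, 100.0, 110.0, 121.0, 0.0]
    converted = transfer_log_f0(contour, src_mean, src_std, tgt_mean, tgt_std)
    print([round(f, 1) for f in converted])
```

Working in the log domain keeps the mapping perceptually uniform: equal ratios of F0 (musical intervals) are preserved when the source and target standard deviations match.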

Audio examples demonstrating formant- and timbre-preserving pitch shifting

Here, we provide audio samples illustrating the capability of PAD-VC to perform formant- and timbre-preserving pitch shifting when the source and target speaker identities are the same. We use a short utterance from HiFi-TTS (speaker ID 92) and shift its pitch by ±4 semitones in steps of 2 semitones.
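A shift of n semitones corresponds to multiplying the fundamental frequency by 2^(n/12). The sketch below applies a shift grid, assumed here to run from -4 to +4 semitones in steps of 2, to an F0 contour. It assumes that pitch shifting is realized by rescaling the F0 trajectory passed to the model while the speaker identity stays fixed; the contour values are invented for illustration.

```python
from typing import Dict, List, Sequence


def shift_f0(f0_hz: Sequence[float], semitones: float) -> List[float]:
    """Scale voiced F0 by 2**(semitones/12); unvoiced frames (F0 = 0) stay 0."""
    factor = 2.0 ** (semitones / 12.0)
    return [f * factor if f > 0.0 else 0.0 for f in f0_hz]


def shift_grid(max_shift: int = 4, step: int = 2) -> List[int]:
    """Semitone offsets from -max_shift to +max_shift in increments of step."""
    return list(range(-max_shift, max_shift + 1, step))


if __name__ == "__main__":
    contour = [0.0, 200.0, 210.0, 0.0]  # illustrative F0 values in Hz
    variants: Dict[int, List[float]] = {n: shift_f0(contour, n) for n in shift_grid()}
    for n, shifted in variants.items():
        print(f"{n:+d} st (x{2.0 ** (n / 12.0):.3f}): {[round(f, 1) for f in shifted]}")
```

Only the F0 contour changes; because the speaker identity conditioning is unchanged, formants and timbre are left to the model rather than being resampled along with the pitch.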

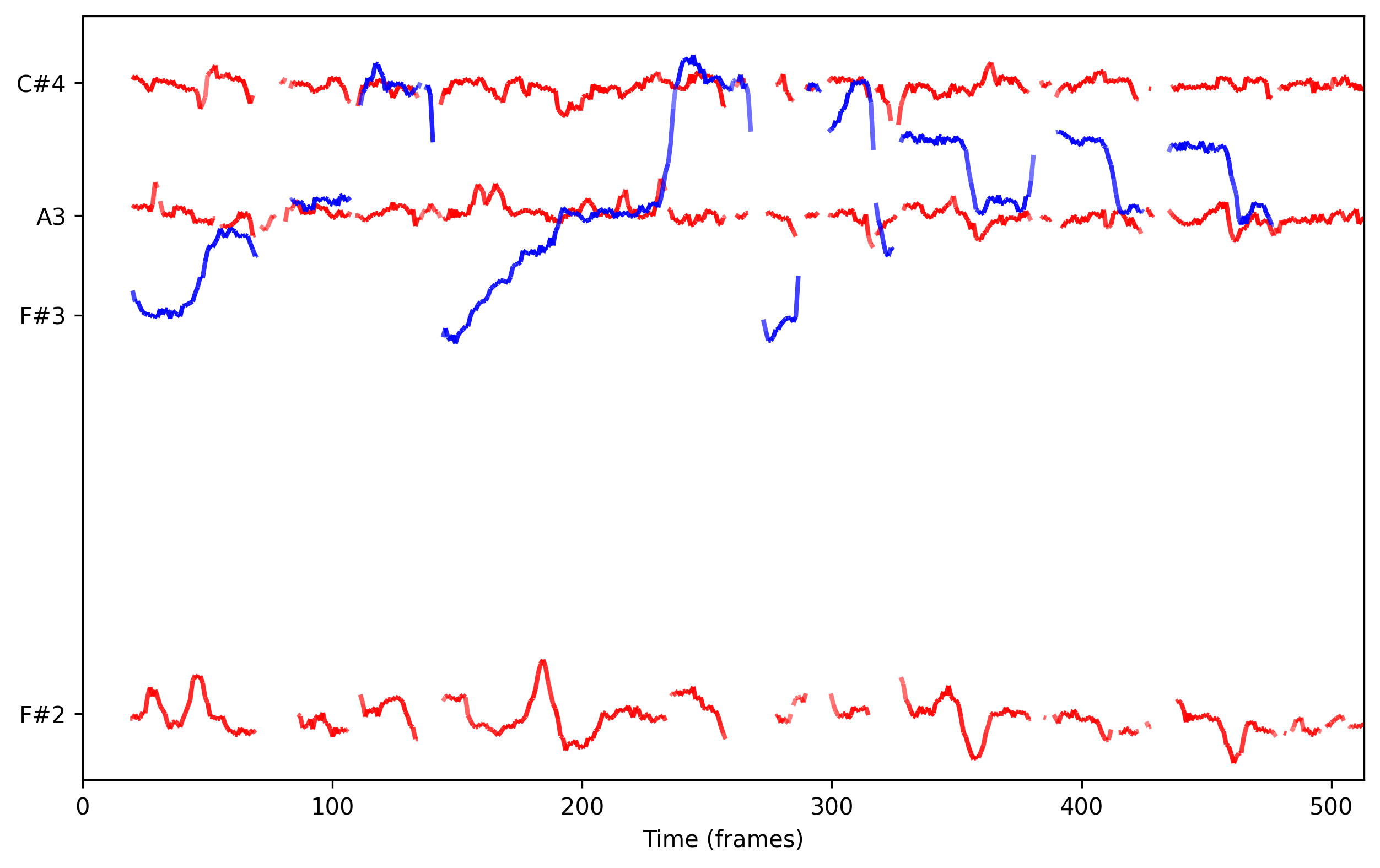

Audio examples demonstrating singing voice conversion and vocal harmonization

Here, we provide audio samples illustrating the capability of PAD-VC to synthesize singing voice even though it was trained on speech recordings only. The last example in particular illustrates how PAD-VC can be used to harmonize an existing singing voice recording. In this case, we convert the original melody sung by Suzanne Vega into three additional voices, for the root, minor third, and fifth of the F#m chord.
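The target pitches for such harmony voices follow from standard equal-temperament arithmetic: in a minor triad, the minor third lies 3 semitones and the perfect fifth 7 semitones above the root. The sketch below computes F#m chord-tone frequencies from MIDI note numbers (A4 = MIDI 69 = 440 Hz) and builds a constant pitch contour for each harmony voice that keeps the voiced/unvoiced pattern of the original melody. The octave (F#3, MIDI 54), the melody values, and the idea of holding each voice on a fixed chord tone are assumptions; the page does not say how the harmony contours were derived.

```python
from typing import List, Sequence

MINOR_TRIAD = (0, 3, 7)  # root, minor third, perfect fifth, in semitones


def midi_to_hz(note: float, a4_hz: float = 440.0) -> float:
    """Equal-temperament frequency of a MIDI note number (A4 = 69)."""
    return a4_hz * 2.0 ** ((note - 69) / 12.0)


def chord_tones_hz(root_midi: int, intervals: Sequence[int] = MINOR_TRIAD) -> List[float]:
    """Frequencies of the chord tones built on root_midi."""
    return [midi_to_hz(root_midi + i) for i in intervals]


def harmony_contour(melody_f0: Sequence[float], tone_hz: float) -> List[float]:
    """Hold a fixed chord tone wherever the melody is voiced (F0 > 0), else 0."""
    return [tone_hz if f > 0.0 else 0.0 for f in melody_f0]


if __name__ == "__main__":
    FS3 = 54  # F#3, assumed root octave
    tones = chord_tones_hz(FS3)  # F#3, A3, C#4
    print([round(t, 2) for t in tones])
    melody = [0.0, 370.0, 415.3, 0.0]  # illustrative F0 of a sung phrase
    voices = [harmony_contour(melody, t) for t in tones]
    print(voices[0])
```

Each resulting contour would then replace the melody's pitch trajectory in a separate conversion pass, and the three outputs are mixed with the original recording.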

Audio examples demonstrating cross synthesis from musical instruments

Here, we provide audio samples illustrating the capability of PAD-VC to convert inputs that are neither speech nor singing voice into interesting, speech-like sounds.

Acknowledgements

This research was partially supported by the Free State of Bavaria in the DSAI project and by the Fraunhofer-Zukunftsstiftung.

References

- Arunava Kr. Kalita, Christian Dittmar, Paolo Sani, Frank Zalkow, Emanuël A. P. Habets, and Rusha Patra

PAD-VC: A Prosody-Aware Decoder for Any-to-Few Voice Conversion

In Proc. of the Int. Workshop on Acoustic Signal Enhancement (IWAENC): 389–393, 2024. DOI: 10.1109/IWAENC61483.2024.10694576

@INPROCEEDINGS{KalitaDSZH24_PAD-VC_IWAENC, author={Kalita, Arunava Kr. and Dittmar, Christian and Sani, Paolo and Zalkow, Frank and Habets, Emanu{\"e}l A. P. and Patra, Rusha}, title={{PAD-VC}: A Prosody-Aware Decoder for Any-to-Few Voice Conversion}, year={2024}, pages={389--393}, booktitle = {Proc. of the Int. Workshop on Acoustic Signal Enhancement ({IWAENC})}, doi={10.1109/IWAENC61483.2024.10694576} }

- Cameron Churchwell, Max Morrison, and Bryan Pardo

High-Fidelity Neural Phonetic Posteriorgrams

In Proc. of the Annual Conf. of the Int. Speech Communication Association (Interspeech): 4287–4291, 2024.

@inproceedings{ChurchwellEtAl24_NeuralPPG_ICASSP, title = {High-Fidelity Neural Phonetic Posteriorgrams}, author = {Cameron Churchwell and Max Morrison and Bryan Pardo}, year = {2024}, booktitle = {Proc. of the Annual Conf. of the Int. Speech Communication Association (Interspeech)}, address = {Seoul, Korea}, pages = {4287--4291} }

- Pablo Pérez Zarazaga, Zofia Malisz, Gustav Eje Henter, and Lauri Juvela

Speaker-independent neural formant synthesis

In Proc. of the Annual Conf. of the Int. Speech Communication Association (Interspeech): 5556–5560, 2023.

@inproceedings{PerezMHJ23_NeuralFormatSynthesis_Interspeech, title = {Speaker-independent neural formant synthesis}, author = {Pablo Pérez Zarazaga and Zofia Malisz and Gustav Eje Henter and Lauri Juvela}, year = {2023}, booktitle = {Proc. of the Annual Conf. of the Int. Speech Communication Association (Interspeech)}, pages = {5556--5560}, address = {Dublin, Ireland}, }

- Berrak Sisman, Junichi Yamagishi, Simon King, and Haizhou Li

An overview of voice conversion and its challenges: From statistical modeling to deep learning

IEEE/ACM Transactions on Audio, Speech, and Language Processing, 29: 132–157, 2020.

@article{SismanEtAl20_VoiceConversionverview_TASLP, title = {An overview of voice conversion and its challenges: {F}rom statistical modeling to deep learning}, author={Berrak Sisman and Junichi Yamagishi and Simon King and Haizhou Li}, journal={{IEEE}/{ACM} Transactions on Audio, Speech, and Language Processing}, volume={29}, pages={132--157}, year={2020}, }

- Yang Yang, Yury Kartynnik, Yunpeng Li, Jiuqiang Tang, Xing Li, George Sung, and Matthias Grundmann

STREAMVC: Real-Time Low-Latency Voice Conversion

In Proc. of the IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP): 11016–11020, 2024.

@inproceedings{YangEtAl24_StreamVC_ICASSP, author={Yang, Yang and Kartynnik, Yury and Li, Yunpeng and Tang, Jiuqiang and Li, Xing and Sung, George and Grundmann, Matthias}, booktitle={Proc. of the {IEEE} Int. Conf. on Acoustics, Speech and Signal Processing ({ICASSP})}, title={STREAMVC: Real-Time Low-Latency Voice Conversion}, year={2024}, pages={11016--11020}, address = {Seoul, Korea}, }

- Anders Riddersholm Bargum, Simon Lajboschitz, and Cumhur Erkut

RAVE for Speech: Efficient Voice Conversion at High Sampling Rates

In Proc. of the Int. Conf. on Digital Audio Effects (DAFx): 41–48, 2024.

@inproceedings{BargumLE24_RAVE_for_Speech_DAFx, author = {Anders Riddersholm Bargum and Simon Lajboschitz and Cumhur Erkut}, title = {{RAVE} for Speech: Efficient Voice Conversion at High Sampling Rates}, booktitle = {Proc. of the Int. Conf. on Digital Audio Effects ({DAFx})}, address = {Guildford, UK}, year = {2024}, pages = {41--48}, }

- Shahan Nercessian, Russell McClellan, Cory Goldsmith, Alex M. Fink, and Nicholas LaPenn

Real-time Singing Voice Conversion Plug-in

In Proc. of the Int. Conf. on Digital Audio Effects (DAFx), 2023.

@inproceedings{NercessianCGFP23_RealtimeSVC_DAFx, author = {Shahan Nercessian and Russell McClellan and Cory Goldsmith and Alex M. Fink and Nicholas LaPenn}, title = {Real-time Singing Voice Conversion Plug-in}, booktitle = {Proc. of the Int. Conf. on Digital Audio Effects ({DAFx})}, address = {Copenhagen, Denmark}, year = {2023}, }

- Paolo Sani, Judith Bauer, Frank Zalkow, Emanuël A. P. Habets, and Christian Dittmar

Improving the Naturalness of Synthesized Spectrograms for TTS Using GAN-Based Post-Processing

In Proc. of the ITG Conf. on Speech Communication: 270–274, 2023.

@inproceedings{SaniEtAl23_PostProcessingGAN_ITG, address = {Aachen, Germany}, author = {Paolo Sani and Judith Bauer and Frank Zalkow and Emanu{\"e}l A. P. Habets and Christian Dittmar}, booktitle = {Proc. of the {ITG} Conf. on Speech Communication}, pages = {270--274}, title = {Improving the Naturalness of Synthesized Spectrograms for {TTS} Using {GAN}-Based Post-Processing}, year = {2023} }

- Keith Ito and Linda Johnson

The LJ Speech Dataset

https://keithito.com/LJ-Speech-Dataset/, 2017.

@misc{ItoJohnson17_LJSpeech_ONLINE, author = {Keith Ito and Linda Johnson}, howpublished = {\url{https://keithito.com/LJ-Speech-Dataset/}}, title = {The {LJ} Speech Dataset}, year = {2017} }

- Evelina Bakhturina, Vitaly Lavrukhin, Boris Ginsburg, and Yang Zhang

Hi-Fi Multi-speaker English TTS dataset

In Proc. of the Annual Conf. of the Int. Speech Communication Association (Interspeech): 2776–2780, 2021.

@inproceedings{bakhturina21_interspeech_HiFi, author={Evelina Bakhturina and Vitaly Lavrukhin and Boris Ginsburg and Yang Zhang}, title={{Hi-Fi} Multi-speaker english {TTS} dataset}, year=2021, booktitle={Proc. of the Annual Conf. of the Int. Speech Communication Association (Interspeech)}, pages={2776--2780}, }

- A. Bonafonte, H. Höge, I. Kiss, A. Moreno, U. Ziegenhain, H. van den Heuvel, H.-U. Hain, X. S. Wang, and M. N. Garcia

TC-STAR: Specifications of Language Resources and Evaluation for Speech Synthesis

In Proc. of the Int. Conf. on Language Resources and Evaluation (LREC), 2006.

@inproceedings{bonafonte06-elra_tc, title = {{TC}-{STAR}: Specifications of Language Resources and Evaluation for Speech Synthesis}, author = {Bonafonte, A. and H{\"o}ge, H. and Kiss, I. and Moreno, A. and Ziegenhain, U. and van den Heuvel, H. and Hain, H.-U. and Wang, X. S. and Garcia, M. N.}, booktitle = {Proc. of the Int. Conf. on Language Resources and Evaluation ({LREC})}, month = {may}, year = {2006}, }