Beamformer-Guided Target Speaker Extraction

M. Elminshawi, S. Chetupalli, and E. A. P. Habets

IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2023.

Abstract

We propose a Beamformer-guided Target Speaker Extraction (BG-TSE) method that extracts a target speaker's voice from a multi-channel recording, given the direction of arrival (DOA) of the target. The method uses a front-end beamformer steered towards the target speaker to provide an auxiliary signal to a single-channel TSE system. By allowing time-varying embeddings in the single-channel TSE block, the method fully exploits the correspondence between the front-end beamformer output and the target speech in the microphone signal. Experiments on simulated two-speaker reverberant mixtures from the WHAMR! dataset demonstrate the advantage of the proposed method over recent single-channel and multi-channel baselines.
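The two ingredients named in the abstract, a beamformer steered towards the target DOA and a TSE block conditioned on a time-varying (per-frame) embedding, can be illustrated with a minimal sketch. The beamformer type, array geometry and fusion scheme are not specified on this page, so the sketch assumes a frequency-domain delay-and-sum beamformer for a far-field source and a linear array, plus a hypothetical multiplicative fusion function `fuse` that combines mixture features with features computed from the beamformer output. It is an illustration under those assumptions, not the authors' implementation.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def steering_vector(freqs, mic_positions, doa_deg, c=SPEED_OF_SOUND):
    """Far-field steering vectors for microphones placed on a line (the x-axis).

    freqs: (F,) bin centre frequencies in Hz
    mic_positions: (M,) microphone coordinates in metres
    doa_deg: direction of arrival in degrees, measured from the array axis
    Returns an (F, M) array of unit-magnitude phase terms.
    """
    delays = mic_positions * np.cos(np.deg2rad(doa_deg)) / c  # (M,) seconds
    return np.exp(-2j * np.pi * freqs[:, None] * delays[None, :])


def delay_and_sum(stft_mix, freqs, mic_positions, doa_deg):
    """Steer a delay-and-sum beamformer towards doa_deg (assumed front-end).

    stft_mix: (M, F, T) multichannel STFT of the mixture
    Returns the (F, T) single-channel beamformer output y = w^H x.
    """
    num_mics = stft_mix.shape[0]
    w = steering_vector(freqs, mic_positions, doa_deg) / num_mics  # (F, M)
    return np.einsum("fm,mft->ft", w.conj(), stft_mix)


def fuse(mix_feats, aux_feats, time_varying=True):
    """Hypothetical multiplicative fusion of (D, T) mixture features with
    (D, T) features computed from the beamformer output."""
    if time_varying:
        emb = aux_feats  # one embedding per frame, aligned with the mixture
    else:
        emb = aux_feats.mean(axis=1, keepdims=True)  # single utterance-level embedding
    return mix_feats * emb


# Usage: a simulated plane wave from 60 degrees, 4 mics spaced 5 cm apart.
rng = np.random.default_rng(0)
freqs = np.linspace(0, 8000, 257)
mics = np.arange(4) * 0.05
source = rng.standard_normal((257, 100)) + 1j * rng.standard_normal((257, 100))
x = steering_vector(freqs, mics, 60.0).T[:, :, None] * source[None]  # (M, F, T)

y_on = delay_and_sum(x, freqs, mics, 60.0)    # steered at the source
y_off = delay_and_sum(x, freqs, mics, 150.0)  # steered elsewhere
print(np.allclose(y_on, source))                                   # True
print(np.mean(np.abs(y_off) ** 2) < np.mean(np.abs(source) ** 2))  # True
print(fuse(np.ones((257, 100)), np.abs(y_on), time_varying=False).shape)  # (257, 100)
```

Steered at the true DOA, the beamformer passes the source unchanged, while steering elsewhere attenuates it. With `time_varying=False` every frame receives the same averaged embedding; with `time_varying=True` each mixture frame is paired with the beamformer features of the same frame, which is the kind of frame-level correspondence the abstract refers to.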

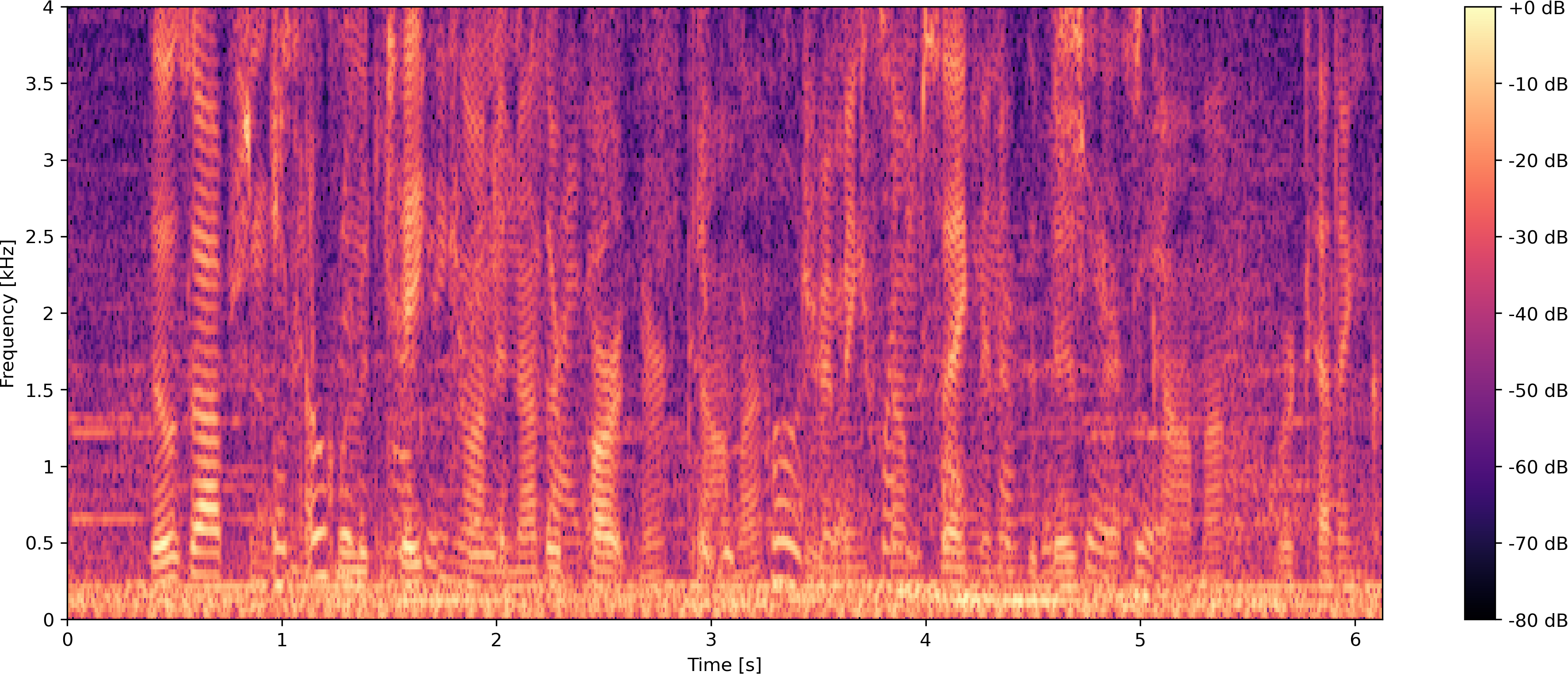

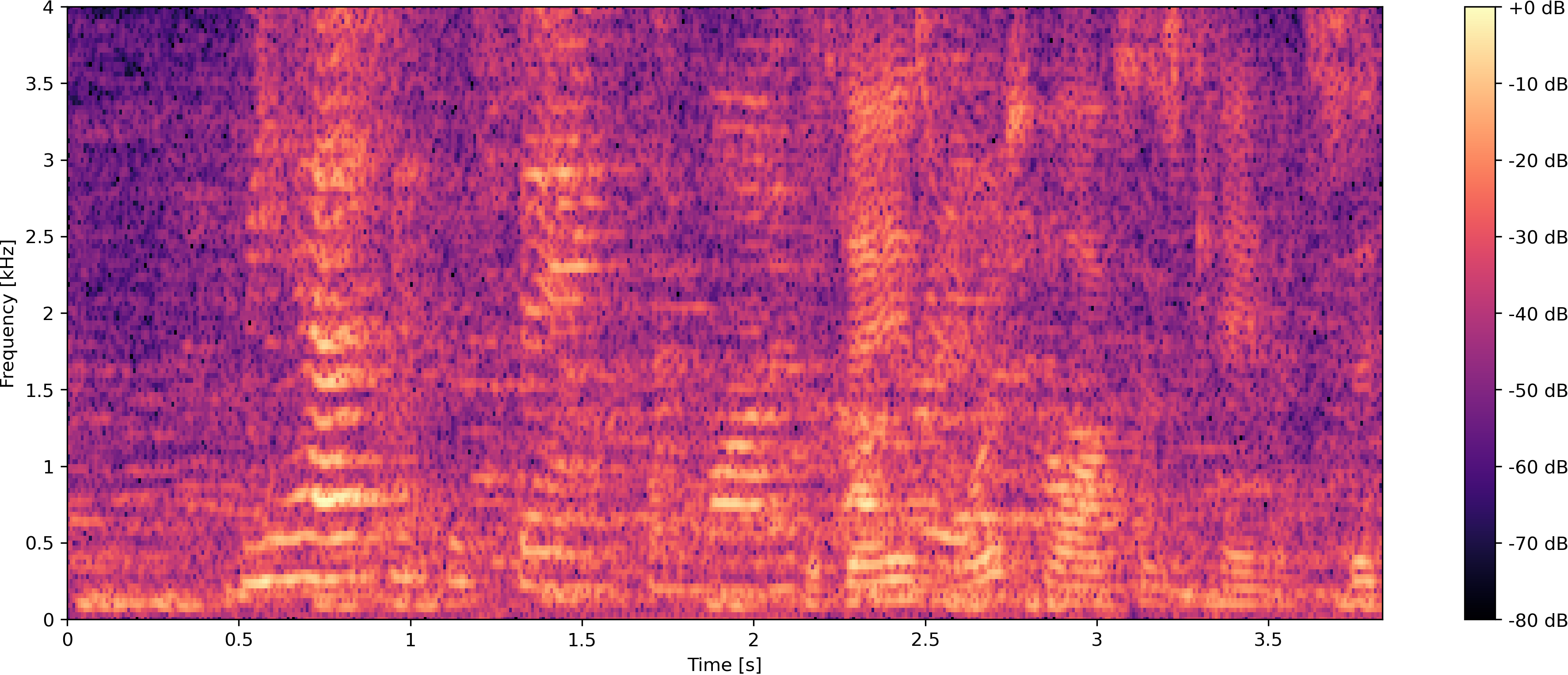

Audio Examples (Matched Condition)

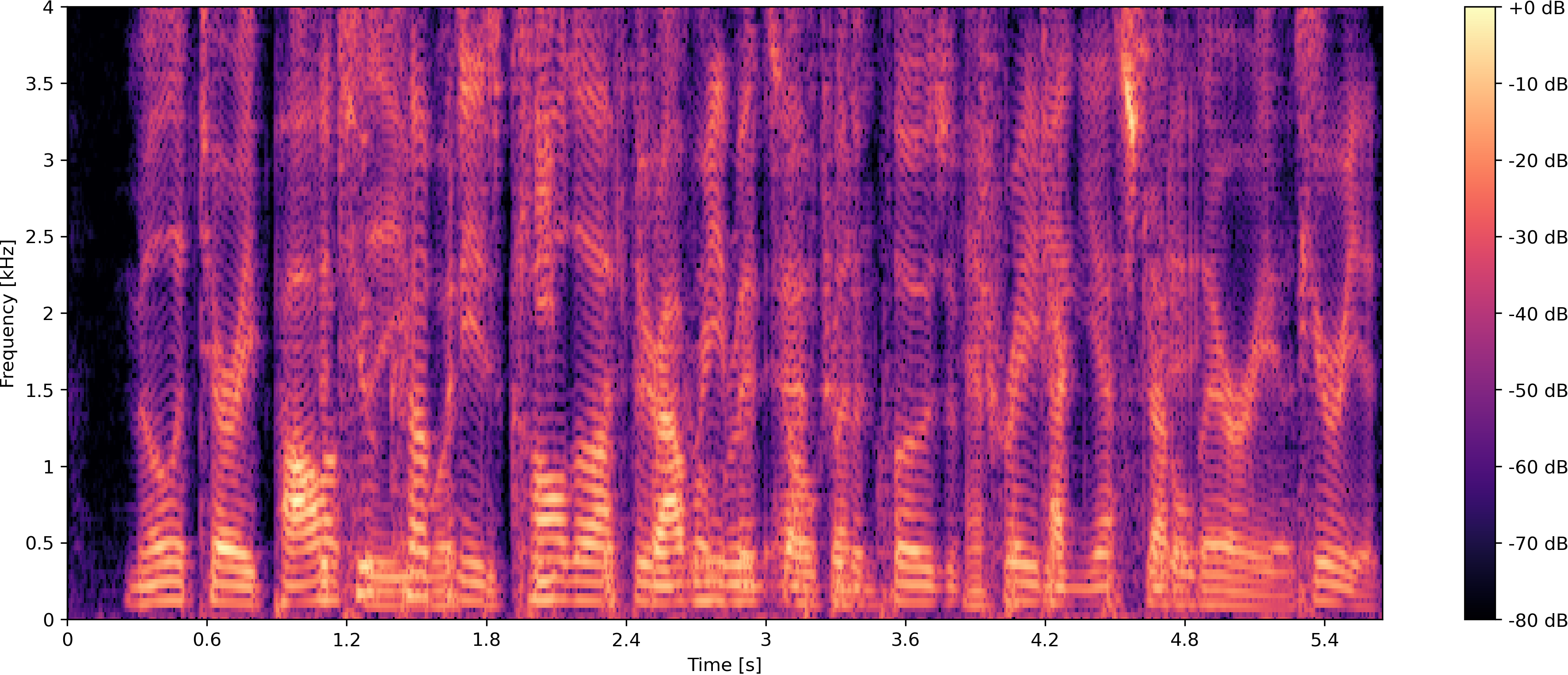

Example 1

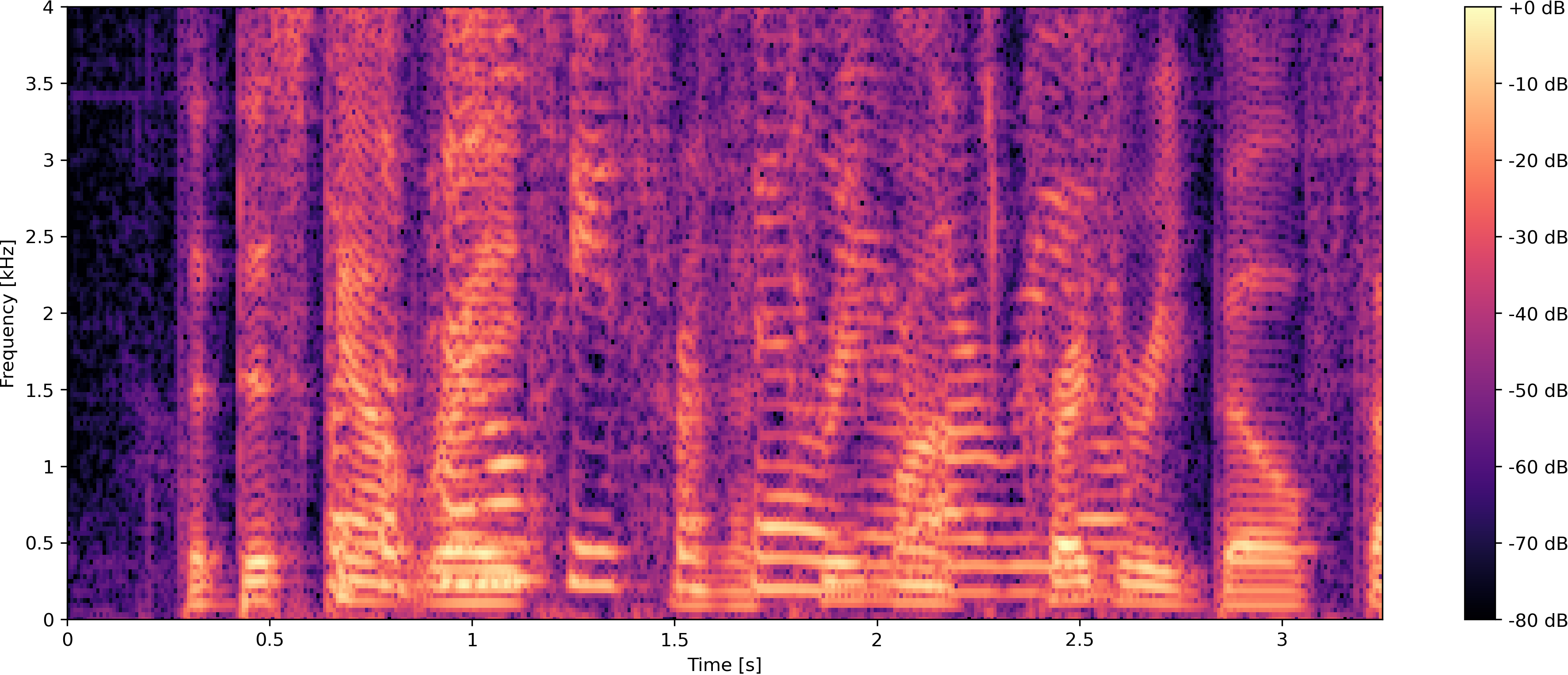

Example 2

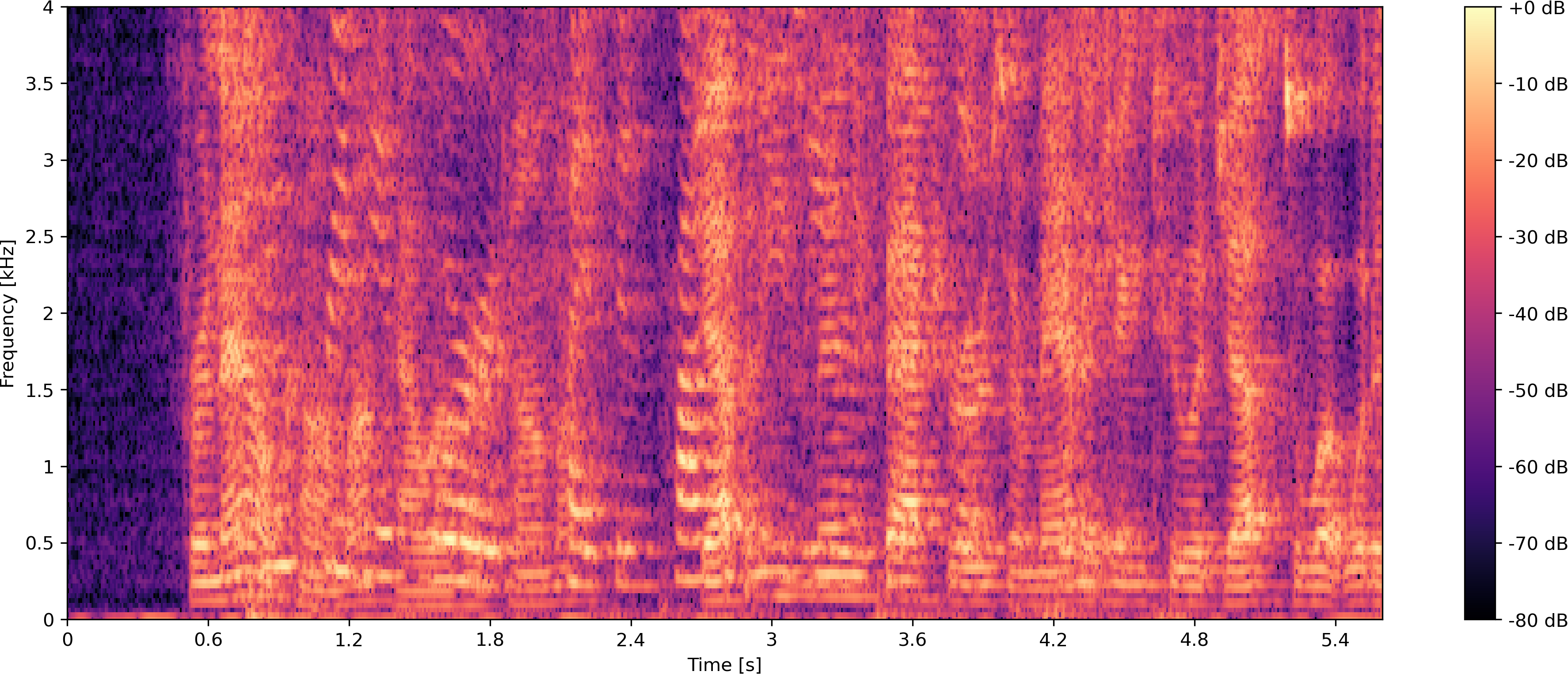

Example 3

Audio Examples (Unmatched Condition)

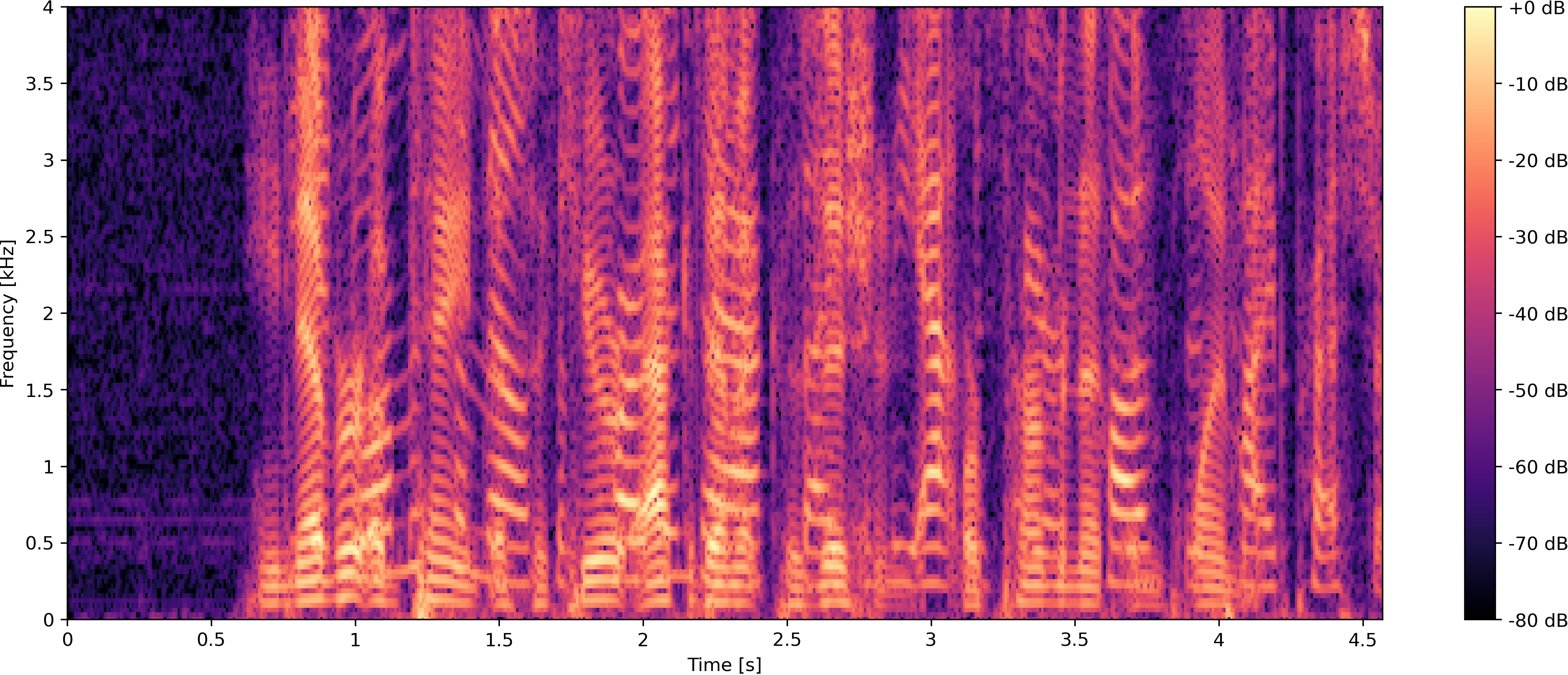

Example 1

Example 2

Example 3
