Low Resource Text-to-Speech Using Specific Data and Noise Augmentation

Kishor Kayyar Lakshminarayana, Christian Dittmar, Nicola Pia, Emanuël A.P. Habets

Presented at EUSIPCO 2023, Helsinki, Finland

Click here for the paper.

Abstract

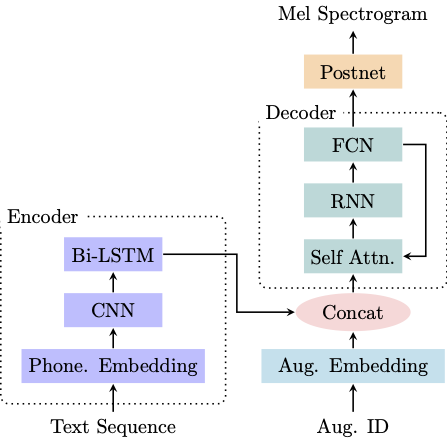

Many neural text-to-speech architectures can synthesize nearly natural speech from text inputs. These architectures typically need to be trained with tens of hours of annotated and high-quality speech data. A lot of time and effort is required to compile such large databases for every new voice. In this paper, we describe a method to extend the popular Tacotron-2 architecture and its training with data augmentation to enable single-speaker synthesis using a limited amount of specific training data. In contrast to elaborate augmentation methods proposed in the literature, we use simple stationary noises for data augmentation. Our extension is easy to implement and adds almost no computational overhead during training and inference. Using only two hours of training data, our approach was rated by human listeners to be on par with the baseline Tacotron-2 trained with 23.5 hours of LJSpeech data. In addition, we tested our model with a semantically unpredictable sentences test, which showed that both models exhibit similar intelligibility levels.
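As a rough illustration of what augmentation with a simple stationary noise can look like, the sketch below mixes white Gaussian noise into a waveform at a chosen signal-to-noise ratio. It is not the authors' implementation: the noise type, the 20 dB SNR, the 220 Hz test tone, and the function names `add_stationary_noise` and `measure_snr_db` are all assumptions made for this example. The 22050 Hz rate matches LJSpeech recordings.

```python
import math
import random


def add_stationary_noise(speech, snr_db, seed=0):
    """Mix white Gaussian noise into `speech` at the requested SNR (dB).

    Hypothetical helper: the paper's noise types and levels are not
    specified on this page, so white noise is only an assumption.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in speech]
    speech_power = sum(s * s for s in speech) / len(speech)
    noise_power = sum(n * n for n in noise) / len(noise)
    # Scale the noise so that speech_power / noise_power matches the target SNR.
    target_noise_power = speech_power / (10.0 ** (snr_db / 10.0))
    gain = math.sqrt(target_noise_power / noise_power)
    scaled_noise = [gain * n for n in noise]
    noisy = [s + n for s, n in zip(speech, scaled_noise)]
    return noisy, scaled_noise


def measure_snr_db(signal, noise):
    """Return the SNR in dB between a clean signal and the added noise."""
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = sum(n * n for n in noise) / len(noise)
    return 10.0 * math.log10(signal_power / noise_power)


# Toy stand-in for one second of speech: a 220 Hz tone at 22050 Hz.
sample_rate = 22050
clean = [0.5 * math.sin(2.0 * math.pi * 220.0 * i / sample_rate)
         for i in range(sample_rate)]
noisy, noise = add_stationary_noise(clean, snr_db=20.0)
print(f"measured SNR: {measure_snr_db(clean, noise):.2f} dB")
```

Because the noise is generated on the fly and only scaled and added, a step like this costs little next to the forward pass of the model, which fits the low-overhead claim in the abstract.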

Proposed Method

EUSIPCO 2023 Presentation

Audio Examples

The following audio examples are synthesized with the baseline Tacotron-2 [1] or the proposed Tacotron-2 with augmentation embedding for different training scenarios.

- FULL: Baseline model trained with the full LJSpeech database [2] (about 24 hours).

- 2H_IS_NA: Proposed model trained with noise augmentation on a 2-hour informed training set, selected from the LJSpeech database to simulate the proposed specification.

- 2H_IS: Baseline model trained with a 2-hour informed training set, selected from the LJSpeech database to simulate the proposed specification.

- 2H_RS_NA: Proposed model trained with noise augmentation on a 2-hour random training set drawn from the LJSpeech database.

- 2H_RS: Baseline model trained with a 2-hour random training set drawn from the LJSpeech database.
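For readers who want to reproduce a setup like the random 2-hour sets (2H_RS), the following sketch draws utterances at random until a duration budget is filled. It is only an illustration under stated assumptions: the function name `pick_random_subset`, the seed, and the toy durations are hypothetical, and the selection rule for the informed sets (2H_IS) is not described on this page, so it is not covered here.

```python
import random


def pick_random_subset(durations, budget_seconds, seed=0):
    """Randomly pick utterance ids whose total duration fits the budget.

    `durations` maps an utterance id to its length in seconds.
    Hypothetical helper, not the authors' selection script.
    """
    ids = sorted(durations)            # fixed order so the seed is reproducible
    random.Random(seed).shuffle(ids)
    chosen, total = [], 0.0
    for utt_id in ids:
        if total + durations[utt_id] > budget_seconds:
            continue                   # skip clips that would exceed the budget
        chosen.append(utt_id)
        total += durations[utt_id]
    return chosen, total


# Toy corpus: 2000 clips of 2 to 10 seconds (made-up values, not LJSpeech).
toy_durations = {f"utt{i:04d}": 2.0 + (i % 9) for i in range(2000)}
budget = 2 * 3600.0                    # two hours in seconds
subset, subset_seconds = pick_random_subset(toy_durations, budget)
print(f"{len(subset)} clips, {subset_seconds / 3600.0:.3f} hours")
```

With real data, the durations would come from the audio files themselves, and the same seed would give the same subset on every run.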

The sentences used to synthesize the samples are listed below, together with the audio examples. All files were synthesized using a pretrained StyleMelGAN vocoder [3].

I was about to do this when cooler judgment prevailed.

To my surprise he began to show actual enthusiasm in my favor.

The lace was of a delicate ivory color, faintly tinted with yellow.

The promoter's eyes were heavy, with little puffy bags under them.

For a full minute he crouched and listened.

But they make the mistake of ignoring their own duality.

arXiv preprint

Click here

References

- Shen, Jonathan, Pang, Ruoming, Weiss, Ron J., Schuster, Mike, Jaitly, Navdeep, Yang, Zongheng, Chen, Zhifeng, Zhang, Yu, Wang, Yuxuan, Skerry-Ryan, RJ, Saurous, Rif A., Agiomyrgiannakis, Yannis, and Wu, Yonghui

Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions

In Proc. IEEE Intl. Conf. on Acoustics, Speech and Signal Processing (ICASSP): 4779–4783, 2018. DOI: 10.1109/ICASSP.2018.8461368

- Ito, Keith, and Johnson, Linda

The LJ Speech Dataset

https://keithito.com/LJ-Speech-Dataset/, 2017.

- Mustafa, Ahmed, Pia, Nicola, and Fuchs, Guillaume

StyleMelGAN: An efficient high-fidelity adversarial vocoder with temporal adaptive normalization

In Proc. IEEE Intl. Conf. on Acoustics, Speech and Signal Processing (ICASSP): 6034–6038, 2021.