Artifacts in frequency and their variation in time ("Birdies")

Author: Antonio Pena

Co-Author: Enrique Alexandre

Background: pattern detection and timbre

A perceptual audio codec includes, by definition, a model of the human auditory system [4]. Most basic codecs estimate a masking threshold to determine the highest level of noise that can be allowed at a given time-frequency (t-f) position without becoming audible. Other measures, such as loudness or pitch, may also be part of the model. These models mimic the auditory interface (outer, middle and inner ear), without considering the higher-level processing carried out by the brain.
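To make the masking-threshold idea concrete, the sketch below combines Terhardt's well-known approximation of the threshold in quiet (surveyed, for example, in [5]) with a deliberately crude per-band rule. The fixed 15 dB offset, the function names and the assumption that levels are calibrated in dB SPL are illustrative assumptions; real psychoacoustic models also spread masking across neighbouring bands and adapt the offset to tonality.

```python
import math

def absolute_threshold_db(f_hz: float) -> float:
    """Terhardt's approximation of the threshold in quiet, in dB SPL."""
    k = f_hz / 1000.0  # frequency in kHz
    return (3.64 * k ** -0.8
            - 6.5 * math.exp(-0.6 * (k - 3.3) ** 2)
            + 1e-3 * k ** 4)

def band_masking_threshold_db(band_level_db: float, center_hz: float,
                              smr_offset_db: float = 15.0) -> float:
    """HYPOTHETICAL toy rule: the allowed noise level in a band is the
    larger of (a) the threshold in quiet at the band centre and (b) the
    band's own level lowered by a fixed offset. No spreading function."""
    return max(absolute_threshold_db(center_hz), band_level_db - smr_offset_db)
```

For a 70 dB band at 1 kHz the toy rule allows noise up to 55 dB, while a quiet 20 dB band at 16 kHz is limited by the threshold in quiet (about 66 dB), so any noise below that level is inaudible anyway.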

High-level auditory processing seems to divide the sound input into a collection of independent items (auditory objects) and to organize these items into several sets (streams) [2]. For example, a tune in which a violin and a flute play at the same time contains many auditory objects (every single note played) and two streams: one for the notes played by the violin and another for the notes played by the flute. In this way, cognitive processing assigns every object to a given stream (auditory streaming).

Human perception (audition, vision, etc.) seems to be driven by a set of basic rules described in the Gestalt psychology literature (Germany, early twentieth century [3]). The most relevant rules are:

- Similarity: elements are grouped if similar.

- Good continuation: a smooth change keeps the elements grouped in the same set.

- Common fate: elements that change at the same time are grouped into the same set.

- Continuity: even if some parts of an acoustic event are lost, previous memory may fill in possible gaps.

- Selective attention: perception can be tuned to a particular stream (analytic) or relaxed in order to perceive something as a single compact set (synthetic).

This behaviour probably relies on both physical cues (tracking of frequency components, time onsets, temporal and frequency envelopes, etc.) and psychological cues (presence of other sources, previous knowledge, images or other events associated with the acoustic excerpt, etc.) to process all this information together.

Timbre can be understood as the whole set of characteristics that remain once pitch, loudness and duration are extracted. Auditory streaming then assembles some of the t-f components into a single auditory object, taking into account the relationships between them. It is especially remarkable that isolated components create new timbres and thus new auditory objects. Continuity, on the other hand, preserves timbre and keeps track of the temporal evolution of components.

Background: bit assignment and coding bands

The core mechanism of an audio coder [5][6] is a set of different quantizers, one of which is chosen to process each frequency region (subband or coding band). This choice is made by a routine that selects the best possible set of quantizers, so that subjective quality is optimized under the constraint of the target bit rate. Increasing the quantizer step size for a particular frequency band injects more quantization noise into the audio signal but lowers the bit cost. This assignment/optimization is time-varying (frame by frame) and uses perceptual information (masking thresholds). One of the available quantizers is the null quantizer: zero bits are assigned, so the band's components are lost (set to zero).
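A common textbook way to implement such a routine is greedy allocation: repeatedly give one more bit per sample to the band whose noise-to-mask ratio (NMR) is worst, until every band is masked or the frame's bit budget is spent. The sketch below is that generic technique, not the allocation of any particular codec; the 6.02 dB-per-bit noise model, the cost of one bit per coefficient and all names are assumptions.

```python
def quant_noise_db(signal_db: float, bits: int) -> float:
    """Rough noise level of a uniform quantizer with `bits` bits per sample.

    Each extra bit halves the step size, lowering the noise by about 6.02 dB.
    With 0 bits (the null quantizer) the band is set to zero, so the error
    equals the band signal itself.
    """
    if bits == 0:
        return signal_db
    return signal_db - 6.02 * bits


def greedy_bit_allocation(signal_db, mask_db, band_size, budget):
    """Greedy allocation; band_size[i] is the number of coefficients in
    band i, i.e. the cost of one extra bit per sample in that band."""
    bits = [0] * len(signal_db)
    spent = 0
    while True:
        nmr = [quant_noise_db(s, b) - m
               for s, b, m in zip(signal_db, bits, mask_db)]
        # Bands whose noise is still audible and whose refinement still fits.
        candidates = [i for i in range(len(bits))
                      if nmr[i] > 0 and spent + band_size[i] <= budget]
        if not candidates:
            return bits
        worst = max(candidates, key=lambda i: nmr[i])
        bits[worst] += 1
        spent += band_size[worst]
```

With bands at 60, 50 and 40 dB over a flat 40 dB mask and four coefficients per band, a generous budget yields `[4, 2, 0]`: the third band gets the null quantizer. With a budget of only 12 bits the result is `[2, 1, 0]`, and the first two bands are left with audible noise.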

Sound examples: timbre vs. some typical bandwidth limitations

Timbre characteristics depend strongly on frequency content, in addition to time structure. Some sound sources (e.g. speech) carry their main characteristics in the first 4 or 5 kHz, and most of their sounds are clearly recognizable with such a limited bandwidth (e.g. the classic 3.4 kHz telephone bandwidth). High-quality speech requires a higher bandwidth, such as 7 or 8 kHz (wide-band speech) or 14 kHz (super wide-band speech). Other types of sounds (e.g. music) call for a much higher bandwidth and become unrecognizable or strongly degraded if the bandwidth is limited. Let us listen to the first two examples:

Glockenspiel: This set of signals has been low-pass filtered with different bandwidths. Glockenspiel is a percussive sound, but its tonal nature preserves part of the timbre in spite of the bandwidth truncation (listen to the last four notes, even with 4 kHz low-pass filtering).

- Play Glocken

- Original (bw: 20 kHz)

- Play Glocken_12k

- 12 kHz low pass filtered

- Play Glocken_8k

- 8 kHz low pass filtered

- Play Glocken_4k

- 4 kHz low pass filtered

Funky sound: Please pay attention to the cymbals when listening to this example. As you will notice, bandwidth truncation rapidly blurs their characteristic timbre, and the cymbals almost disappear as a perceived instrument when the filtering is more severe. Bass and keyboards, on the other hand, suffer much less from the reduction of high-frequency components.

- Play Funky

- Original (bw: 20 kHz)

- Play Funky_12k

- 12 kHz low pass filtered

- Play Funky_8k

- 8 kHz low pass filtered

- Play Funky_4k

- 4 kHz low pass filtered
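The text does not say how the band-limited versions were produced. One common way to create such examples is a linear-phase FIR low-pass filter; the sketch below designs one with the windowed-sinc method (Hamming window) in plain Python. The 101-tap length, the window choice and the function names are assumptions, and a practical tool would use an optimized library routine rather than this direct convolution loop.

```python
import math

def lowpass_taps(cutoff_hz: float, fs: float, num_taps: int = 101):
    """Windowed-sinc (Hamming) FIR low-pass design; num_taps should be odd."""
    fc = cutoff_hz / fs          # cutoff in cycles per sample
    m = num_taps - 1
    taps = []
    for n in range(num_taps):
        k = n - m / 2            # distance from the filter centre
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / m)
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalize to unity gain at DC


def fir_filter(x, taps):
    """Direct-form convolution; the output has the same length as x."""
    y = []
    for i in range(len(x)):
        acc = 0.0
        for j, h in enumerate(taps):
            if i - j < 0:
                break
            acc += h * x[i - j]
        y.append(acc)
    return y
```

For instance, `fir_filter(signal, lowpass_taps(4000, 44100))` keeps a 1 kHz tone essentially unchanged while attenuating a 15 kHz component by roughly 50 dB, which is the kind of loss that removes the cymbals' high-frequency content in the 4 kHz version.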

It is not difficult to sort these funky sounds by decreasing bandwidth, but what about the following ones? Try to sort these Glockenspiel sounds:

- Play GlockenA

- Play GlockenB

- Play GlockenC

- Play GlockenD

Sound examples: some typical bandwidth variations through time (the birdies artifact)

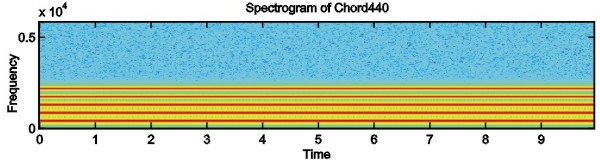

Consider the synthetic signal Chord 440 (Figure 1a) as the next example. It contains a fundamental "A" note (440 Hz) plus four harmonics. The five tones fuse together into a consonant chord, that is, a single perceived stream.

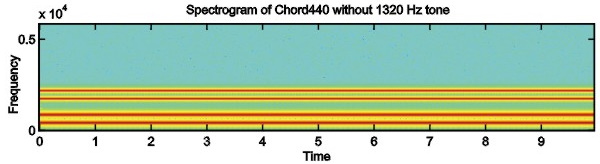

When the 1320 Hz harmonic is suppressed, the four remaining tones still fuse together into a consonant chord, but with a different timbre (Figure 1b). Moreover, because the energy of the removed harmonic is missing, the perceived loudness is reduced, yet a single stream is still clearly perceived.

Play Chord 440 without 1320 Hz tone
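The text does not list the four harmonics. The sketch below assumes they are harmonics 2 to 5 (880, 1320, 1760 and 2200 Hz) with equal amplitudes at a 44.1 kHz sample rate; all of these are assumptions. It generates the steady chord and the version without the 1320 Hz tone, and it checks the energy argument: removing one of five equal-amplitude partials removes one fifth of the mean power, which is consistent with the lower perceived loudness.

```python
import math

FS = 44100  # assumed sample rate (Hz)
# Assumed partials: the 440 Hz fundamental plus harmonics 2 to 5.
PARTIALS_HZ = [440, 880, 1320, 1760, 2200]

def chord_440(duration_s: float, omit=(), amplitude: float = 0.2, fs: int = FS):
    """Sum of equal-amplitude sinusoids; any frequency listed in `omit`
    is left out (e.g. omit=(1320,) for the second listening example)."""
    partials = [f for f in PARTIALS_HZ if f not in omit]
    n = int(duration_s * fs)
    return [amplitude * sum(math.sin(2 * math.pi * f * i / fs) for f in partials)
            for i in range(n)]

def mean_power(x) -> float:
    """Average of the squared samples."""
    return sum(s * s for s in x) / len(x)
```

An amplitude of 0.2 per partial keeps the five-tone sum within [-1, 1], so the samples can be written to a 16-bit file without clipping.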

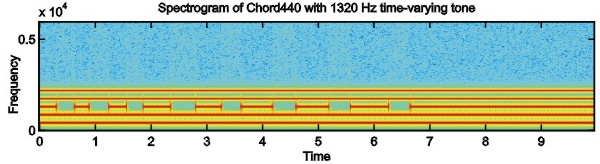

In the next example, the 1320 Hz harmonic is suppressed intermittently, so that it appears and disappears from the sound (Figure 1c). Now the 1320 Hz tone stands out as a distinct item, clearly separated from the four stationary tones that form the chord, and thus two separate streams are perceived. When listening to the last 3 seconds, you will probably notice how the tone fuses back into the consonant chord once it becomes stationary again.

Play Chord 440 with time-varying 1320 Hz tone
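The sketch below builds a signal in the spirit of this third example. Only two features come from the text: an intermittent 1320 Hz partial over a stationary chord, and a final 3 seconds in which that partial is stationary again. The switching pattern (random segments of 0.1 to 0.5 s alternating off and on), the 5 ms smoothing, the 44.1 kHz sample rate and the choice of harmonics 2 to 5 are assumptions.

```python
import math
import random

FS = 44100                          # assumed sample rate (Hz)
STEADY_HZ = [440, 880, 1760, 2200]  # assumed stationary partials
GATED_HZ = 1320                     # the intermittent partial

def gate_envelope(duration_s, tail_s=3.0, tau_s=0.005, seed=1, fs=FS):
    """On/off gain for the 1320 Hz partial.

    Segments of random length (0.1 to 0.5 s) alternate between off and on;
    the last `tail_s` seconds stay on. A one-pole smoother with time
    constant `tau_s` softens the switching to avoid clicks.
    """
    rng = random.Random(seed)
    n = int(duration_s * fs)
    tail_start = n - int(tail_s * fs)
    alpha = 1.0 - math.exp(-1.0 / (tau_s * fs))
    env, gain, target, next_switch = [], 1.0, 1.0, 0
    for i in range(n):
        if i >= tail_start:
            target = 1.0                  # stationary again until the end
        elif i >= next_switch:
            target = 1.0 - target         # toggle off <-> on
            next_switch = i + int(rng.uniform(0.1, 0.5) * fs)
        gain += alpha * (target - gain)
        env.append(gain)
    return env

def chord_with_gated_tone(duration_s, amplitude=0.2, fs=FS):
    """Stationary four-tone chord plus the gated 1320 Hz partial."""
    env = gate_envelope(duration_s, fs=fs)
    out = []
    for i, g in enumerate(env):
        t = i / fs
        steady = sum(math.sin(2 * math.pi * f * t) for f in STEADY_HZ)
        out.append(amplitude * (steady + g * math.sin(2 * math.pi * GATED_HZ * t)))
    return out
```

The smoothing matters: switching the partial abruptly would add broadband clicks, which are a different artifact from the one this example is meant to demonstrate.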

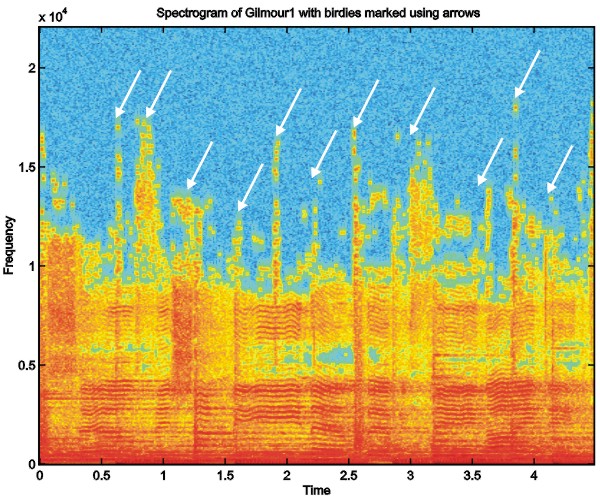

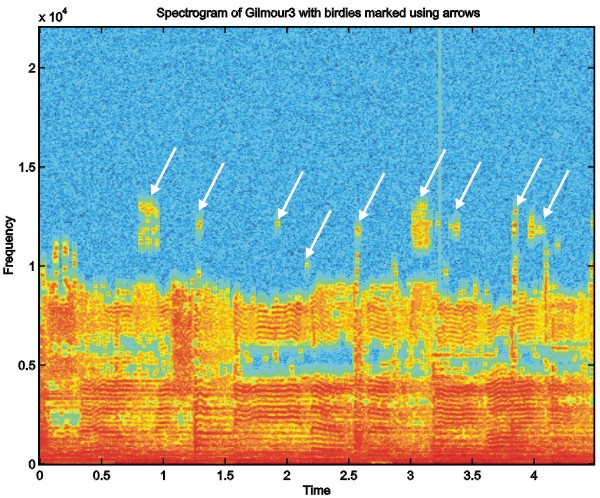

The most common perceptual measure in audio coding is the masking threshold, which is usually computed frame by frame. At low bit rates, however, slight variations of the masking threshold from one frame to the next may lead to very different quantizer settings; as a result, some groups of spectral coefficients may appear and disappear. These appearing and disappearing spectral regions constitute extra auditory objects, which are separate from the main one and are thus clearly perceived. They lead to a type of artifact known as birdies, which has been reported both in the tuning of audio codecs [7] and in objective perceptual assessment methods [1].
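The mechanism can be illustrated with a toy model. In the sketch below, a band is coded only when its signal-to-mask ratio (SMR) exceeds a fixed minimum, a hypothetical stand-in for the constraint imposed by a low bit rate, and the masking threshold jitters by at most ±0.5 dB from frame to frame. All numbers and names are illustrative assumptions; a real coder shares one bit pool among all bands, which makes the decisions even more interdependent.

```python
import random

def band_is_coded(signal_db, mask_db, min_smr_db):
    """Toy decision: 1 if the band gets bits, 0 if it is null-quantized."""
    return 1 if signal_db - mask_db > min_smr_db else 0

def count_toggles(states):
    """Number of frame-to-frame on/off changes (appear/disappear events)."""
    return sum(1 for a, b in zip(states, states[1:]) if a != b)

def simulate(signal_db, n_frames=200, mask_db=40.0, jitter_db=0.5,
             min_smr_db=12.0, seed=0):
    """Per-frame on/off state of one band whose masking threshold
    fluctuates slightly (uniformly within +/- jitter_db) between frames."""
    rng = random.Random(seed)
    return [band_is_coded(signal_db,
                          mask_db + rng.uniform(-jitter_db, jitter_db),
                          min_smr_db)
            for _ in range(n_frames)]
```

A band at 52 dB (SMR right at the 12 dB limit) flips between coded and null-quantized in roughly every other frame, whereas a band at 70 dB is coded in every frame. The threshold jitter is the same for both; only the borderline band produces the intermittent pattern that is heard as birdies.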

Consider a music signal that has been coded at bit rates low enough that strange changes appear in the upper frequency bands. There are three coded versions, from best to worst, in which you can clearly perceive strange high-frequency effects that appear and disappear with no specific connection to the original sound.

- Play Gilmour

- Original (bw: 20 kHz)

- Play Gilmour1

- Slightly distorted decoded signal

- Play Gilmour2

- Clearly distorted decoded signal

- Play Gilmour3

- Severely distorted decoded signal

Some of these artifacts appear in the upper portion of the spectrum (see Figure 2); in such cases, a bandwidth limitation is recommended to avoid high-frequency distortions.

Figure 2: spectrograms (with birdies / band-limited / original)

In other cases, however, the artifacts also occur in the lower part of the spectrum (see Figure 3), and a severe bandwidth limitation would be required to achieve sufficient quality in the transmitted audio range. Listen to the following files, low-pass filtered to 8 kHz, and notice that not every coded file has become birdies-free.

Figure 3: spectrograms (with birdies / band-limited / original)

- Play Gilmour_8k

- 8 kHz low pass filtered original

- Play Gilmour1_8k

- Almost birdies-free

- Play Gilmour2_8k

- Slightly worse decoded signal

- Play Gilmour3_8k

- Severely distorted decoded signal
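The limits of the band-limiting strategy can be expressed in toy terms: forcing every band above the cutoff to the null quantizer removes any birdies there, but leaves the on/off patterns of the bands below it untouched. The sketch below uses hypothetical per-band on/off sequences (1 = coded, 0 = null-quantized) and hypothetical band edges; it only illustrates the argument above and is not how any particular codec applies its bandwidth limit.

```python
def count_toggles(states):
    """Number of frame-to-frame on/off changes in one band."""
    return sum(1 for a, b in zip(states, states[1:]) if a != b)

def apply_bandwidth_limit(band_states, band_upper_hz, cutoff_hz):
    """Null-quantize, in all frames, every band whose upper edge lies above
    the cutoff; bands inside the kept range are left unchanged."""
    return [states if upper <= cutoff_hz else [0] * len(states)
            for states, upper in zip(band_states, band_upper_hz)]

def remaining_toggles(band_states, band_upper_hz, cutoff_hz):
    """On/off changes per band after the bandwidth limit is applied."""
    limited = apply_bandwidth_limit(band_states, band_upper_hz, cutoff_hz)
    return [count_toggles(s) for s in limited]
```

With four bands whose upper edges are 4, 8, 12 and 16 kHz, and with intermittent patterns in the 4 to 8 kHz band and the 12 to 16 kHz band, an 8 kHz limit silences the upper birdie but leaves all four toggles of the 4 to 8 kHz band, which matches what can be heard in the 8 kHz versions above.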

References

[1] J. Beerends and J. Stemerdink, "Modelling a cognitive aspect in the measurement of the quality of music codecs", in Preprint 3800, 96th AES Conv., Amsterdam, 1994.

[2] A. S. Bregman, "Auditory Scene Analysis: The Perceptual Organization of Sound", MIT Press, 1990.

[3] K. Koffka, "Principles of Gestalt Psychology", Kegan Paul, Trench, Trubner & Co., Ltd., London, 1936.

[4] B. C. J. Moore, "An Introduction to the Psychology of Hearing", Academic Press, 1989.

[5] T. Painter and A. Spanias, "Perceptual coding of digital audio", Proc. of the IEEE, vol. 88, no. 4, pp. 451-513, April 2000.

[6] M. Bosi and R. E. Goldberg, "Introduction to Digital Audio Coding and Standards", Springer, 2003, ISBN: 978-1-4020-7357-1.

[7] A. S. Pena, "Técnicas de modelado psicoacústico aplicadas a la codificación de audio de muy alta calidad" [Psychoacoustic modelling techniques applied to very high quality audio coding], PhD thesis (in Spanish), Universidad Politécnica de Madrid, 1994.