Following Section 3.3.1 of [Müller, FMP, Springer 2015], we discuss in this notebook some applications in which automated synchronization methods play an important role in supporting users as they experience and explore music. For an overview of similar applications, we refer to the following articles:

- David Damm, Christian Fremerey, Verena Thomas, Michael Clausen, Frank Kurth, and Meinard Müller: A Digital Library Framework for Heterogeneous Music Collections: From Document Acquisition to Cross-Modal Interaction. International Journal on Digital Libraries: Special Issue on Music Digital Libraries, 12(2-3): 53–71, 2012.
- Masataka Goto and Roger B. Dannenberg: Music Interfaces Based on Automatic Music Signal Analysis: New Ways to Create and Listen to Music. IEEE Signal Processing Magazine, 36(1): 74–81, 2019.
- Meinard Müller, Andreas Arzt, Stefan Balke, Matthias Dorfer, and Gerhard Widmer: Cross-Modal Music Retrieval and Applications: An Overview of Key Methodologies. IEEE Signal Processing Magazine, 36(1): 52–62, 2019.

Music Library Scenario

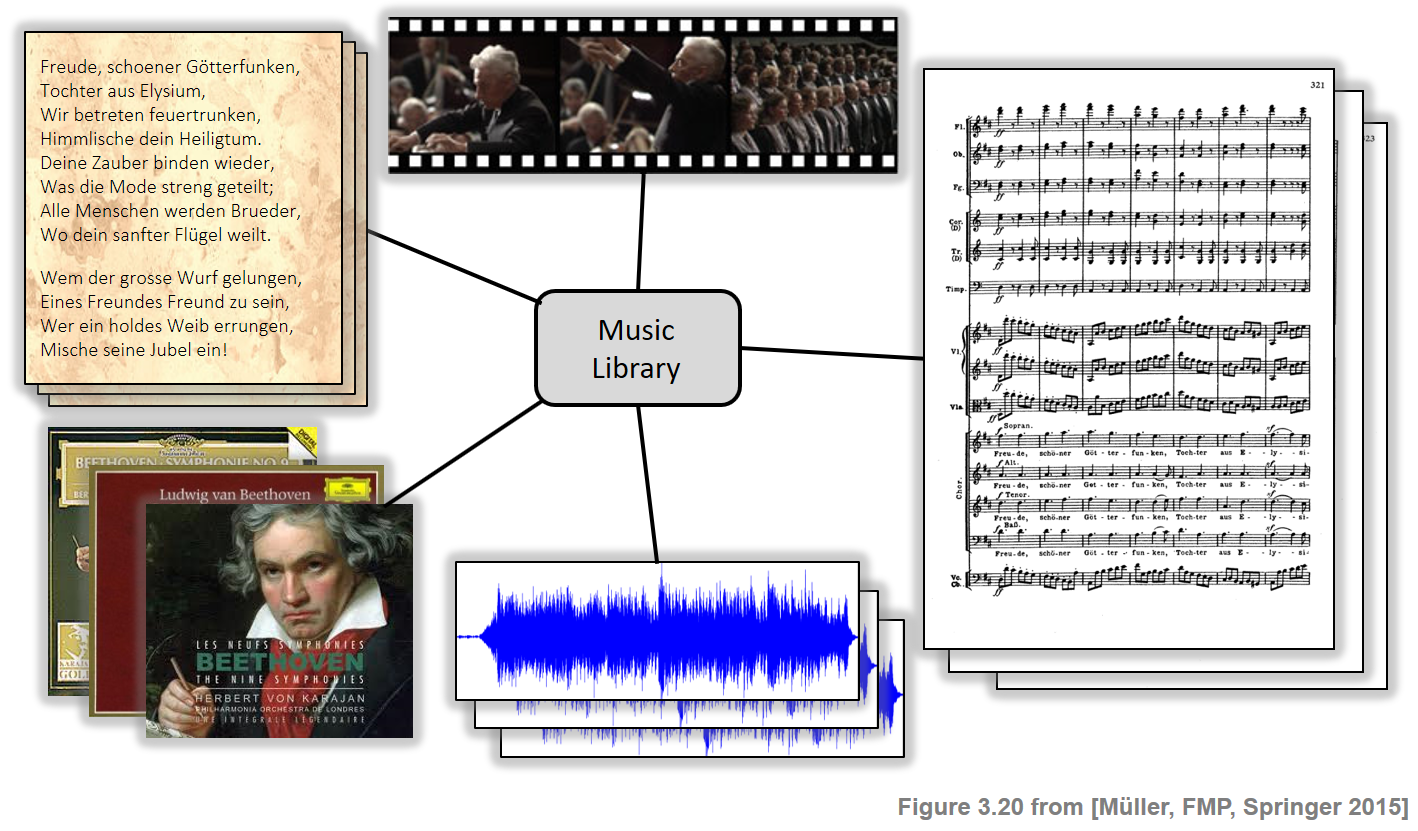

Significant digitization efforts have resulted in large music collections, which comprise music-related documents of various types and formats, including text, symbolic data, audio, images, and video, that express musical content at different semantic levels. Modern digital music libraries contain textual data such as lyrics and libretti, symbolic data, visual data such as scanned sheet music or CD album covers, as well as audio and video recordings of performances. Therefore, beyond the mere recording and digitization of musical data, a key challenge in a real-life library scenario is to integrate techniques and interfaces for organizing, understanding, and searching musical content in a robust, efficient, and intelligent manner. In this context, music synchronization techniques are one way to automatically generate cross-links between these documents, which can then be used to make musical data more accessible to the user.

Interpretation Switcher Interface

As a first scenario, let us consider the case of having many different audio recordings of the same musical work. For example, for Beethoven's Fifth Symphony, a digital music library may contain interpretations by Bernstein and Sawallisch, Liszt's piano transcription of Beethoven's Fifth played by Scherbakov, and a synthesized version generated from a MIDI file. The Interpretation Switcher interface allows a user to select several recordings of the same piece of music that have previously been synchronized. Each recording is represented by a slider bar indicating the current playback position with respect to that recording's timeline. Each timeline encodes absolute time, so that the length of a slider bar is proportional to the duration of the respective version. The user may listen to a specific recording by activating its slider bar and then, at any time during playback, seamlessly switch to any of the other versions. This kind of navigation between different documents is also referred to as interdocument navigation. The following video gives an impression of this switching functionality, where the four slider bars correspond to four versions of the first part (the exposition) of Beethoven's Fifth Symphony.
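To make the switching step more concrete, here is a minimal Python sketch of how a playback position could be transferred from one version to another. It assumes that the synchronization procedure has already produced a warping path between version A and version B (for example, by DTW on chroma features), given as a list of frame index pairs `(n, m)`, and that both feature sequences share the same feature rate. The function name `switch_position`, the path format, the linear interpolation between path points, and the averaging of the B indices where the path stays on one A frame are illustrative assumptions, not the actual implementation of the Interpretation Switcher.

```python
from bisect import bisect_right


def switch_position(t_sec, path, feature_rate):
    """Map a playback position (seconds) in version A to version B (sketch).

    path: list of (n, m) frame index pairs of a monotonic warping path,
          n indexing version A and m indexing version B (assumed format).
    feature_rate: frames per second, assumed equal for both versions.
    """
    # Where the path stays on one A frame, average the matching B frames
    grouped = {}
    for n, m in path:
        grouped.setdefault(n, []).append(m)
    xs = sorted(grouped)
    ys = [sum(grouped[n]) / len(grouped[n]) for n in xs]

    x = t_sec * feature_rate  # position in A, measured in frames
    # Clamp positions before the first or after the last path point
    if x <= xs[0]:
        return ys[0] / feature_rate
    if x >= xs[-1]:
        return ys[-1] / feature_rate

    # Find neighbouring path points with xs[i-1] <= x < xs[i]
    i = bisect_right(xs, x)
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    # Linear interpolation between the two path points, converted back to seconds
    y = y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return y / feature_rate


# Toy warping path with DTW steps (1,0), (0,1), (1,1); feature rate 2 Hz
path = [(0, 0), (1, 0), (2, 1), (3, 2), (3, 3), (4, 4), (5, 5), (5, 6)]
print(switch_position(1.75, path, 2))  # 1.625
```

Interpolating between path points, rather than snapping to the nearest frame, keeps the target position smooth even at low feature rates; in an interface, this mapping would be applied whenever the user activates another slider bar.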

In this example, the Interpretation Switcher also displays version-dependent annotations below each slider bar, where labeled segments are represented by color-coded blocks. Here, the three segments correspond to the first theme (blue), the second theme (red), and the end section (green) of the exposition. Based on these annotations, the Interpretation Switcher also facilitates navigation within a given document: the user can jump directly to the beginning of any structural element simply by clicking on the corresponding block. This kind of navigation is also called intradocument navigation. In combination, inter- and intradocument navigation allow a user to conveniently browse through the different performances of a given musical work and to easily locate, play back, and compare musically interesting passages.
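Intradocument navigation on top of such annotations can be sketched in a few lines of Python. The sketch assumes that the annotation of one version is a list of `(start_sec, end_sec, label)` triples sorted by start time; the segment boundaries below are hypothetical values chosen for illustration, and the helper names `jump_to` and `segment_at` are likewise hypothetical.

```python
from bisect import bisect_right

# Hypothetical annotation of one version: (start_sec, end_sec, label), sorted by start
annotation = [
    (0.0, 21.0, "first theme"),
    (21.0, 47.5, "second theme"),
    (47.5, 60.0, "end section"),
]


def jump_to(label, annotation):
    """Return the start time of the first segment with the given label (click on a block)."""
    for start, _, lab in annotation:
        if lab == label:
            return start
    raise KeyError(label)


def segment_at(t_sec, annotation):
    """Return the label of the segment containing t_sec, or None if t_sec lies outside all segments."""
    starts = [start for start, _, _ in annotation]
    i = bisect_right(starts, t_sec) - 1
    if i >= 0 and t_sec < annotation[i][1]:
        return annotation[i][2]
    return None


print(jump_to("second theme", annotation))  # 21.0
print(segment_at(30.0, annotation))  # second theme
```

The function `segment_at` could be used, for example, to highlight the block under the current playback position, while `jump_to` realizes the click on a block.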

Score Viewer Interface

As a second scenario, let us consider a more multimodal setting dealing with audio–visual music data. We have seen that sheet music and audio recordings represent and describe music at different levels of abstraction. Sheet music specifies high-level parameters such as notes, keys, measures, or repetitions in a visual form. Because of its explicitness and compactness, Western music is often discussed and analyzed on the basis of sheet music. In contrast, most people enjoy music by listening to audio recordings, which represent music in an acoustic form. In particular, the nuances and subtleties of musical performances, which are generally not written down in the score, make the music come alive.

In the following, we assume that links between the images of the sheet music and a corresponding audio recording are available. The following video shows a Score Viewer interface that presents sheet music while playing back an associated audio recording, a functionality that is often referred to as score following. The functionality is illustrated by means of Beethoven's Piano Sonata Op. 13 (Pathétique). When audio playback starts, the corresponding measures in the sheet music are highlighted synchronously, based on the linking information generated by the synchronization procedure. Additional control elements allow the user to switch between measures of the currently selected musical work. By clicking on a measure, the user changes the playback position, and the audio recording resumes at the corresponding time position. If more than one recording is available for the currently selected musical work, the Score Viewer interface may naturally be combined with an Interpretation Switcher.
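The two directions of the linking (audio time to measure for highlighting, and measure to audio time for a click) can be sketched as follows. This minimal Python example assumes that the synchronization result is stored as a list of `(start_sec, measure_number)` pairs in playback order, where a measure inside a repeated section occurs more than once; the data and the helper names `measure_at` and `seek_to_measure` are hypothetical. Resuming at the next occurrence of a clicked measure after the current position is one possible design choice among several.

```python
from bisect import bisect_right

# Hypothetical linking: audio start time (seconds) of each measure in playback order.
# Measure numbers refer to the printed score; measures 2-3 are repeated.
linking = [(0.0, 1), (2.1, 2), (4.0, 3), (6.2, 2), (8.1, 3), (10.3, 4)]


def measure_at(t_sec, linking):
    """Return the score measure to highlight at audio time t_sec."""
    starts = [t for t, _ in linking]
    # Before the first onset, highlight the first measure
    i = max(bisect_right(starts, t_sec) - 1, 0)
    return linking[i][1]


def seek_to_measure(measure, linking, current_t=0.0):
    """Return the audio time at which to resume playback after a click on `measure`.

    Prefers the first occurrence at or after current_t and falls back to the
    first occurrence in the recording.
    """
    times = [t for t, m in linking if m == measure]
    if not times:
        raise KeyError(measure)
    later = [t for t in times if t >= current_t]
    return later[0] if later else times[0]


print(measure_at(7.0, linking))  # 2
print(seek_to_measure(2, linking, current_t=5.0))  # 6.2
```

Storing the linking in playback order rather than score order is what allows a measure with a repeat sign to map to several time positions in the recording.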