Following Section 6.2.1 of [Müller, FMP, Springer 2015], we introduce in this notebook the concepts of beat and tempo.

Basic Notions and Assumptions

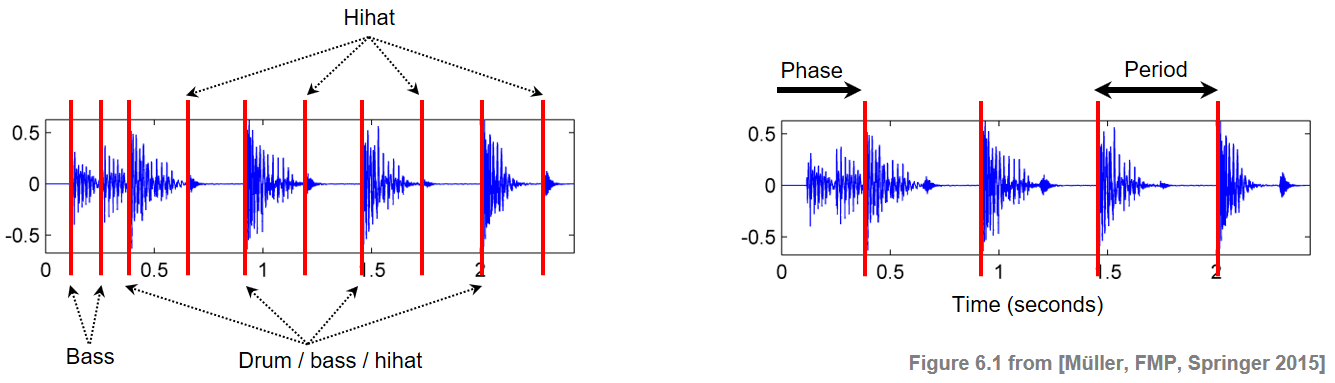

Temporal and structural regularities are perhaps the most important incentives for people to get involved and to interact with music. It is the beat that drives music forward and provides the temporal framework of a piece of music. Intuitively, the beat corresponds to the pulse a human taps along to when listening to music. The beat is often described as a sequence of perceived pulse positions, which are typically equally spaced in time and specified by two parameters: the phase and the period. The term tempo refers to the rate of the pulse and is given by the reciprocal of the beat period. The accompanying figure illustrates these notions using the beginning of "Another One Bites the Dust" by Queen.
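To make the roles of phase and period concrete, the following minimal sketch builds an equally spaced beat grid and derives the tempo in BPM from the period. The function name `beat_grid` and the numeric values are illustrative assumptions; they are not taken from the recording shown in the figure.

```python
import numpy as np

def beat_grid(phase_sec, period_sec, duration_sec):
    """Return equally spaced beat positions (in seconds) in [phase_sec, duration_sec).

    phase_sec  : position of the first beat (assumed non-negative)
    period_sec : time between two consecutive beats
    """
    num_beats = int(np.ceil((duration_sec - phase_sec) / period_sec))
    return phase_sec + period_sec * np.arange(max(num_beats, 0))

# Hypothetical example values
period_sec = 0.5
beats = beat_grid(phase_sec=0.2, period_sec=period_sec, duration_sec=3.0)

# The reciprocal of the period is the pulse rate in beats per second;
# multiplying by 60 converts it to beats per minute (BPM).
tempo_bpm = 60.0 / period_sec

print('Beat positions (sec):', beats)
print('Tempo (BPM):', tempo_bpm)
```

Changing only the phase shifts all beat positions by the same amount and leaves the tempo untouched, which is why both parameters are needed to specify a beat sequence.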

The extraction of tempo and beat information from audio recordings is a challenging problem, in particular for music with weak note onsets and local tempo changes. For example, in the case of romantic piano music, the pianist often takes the freedom of speeding up and slowing down the tempo, an artistic means also referred to as tempo rubato. There is a wide range of music where the notions of tempo and beat remain rather vague or are even nonexistent. Sometimes, the rhythmic flow of music is deliberately interrupted or disturbed by syncopation, where certain notes outside the regular grid of beat positions are stressed. The following audio examples indicate some of the challenges:

Music with weak onsets (Borodin, String Quartet No. 2, 3rd movement):

Romantic music with local tempo fluctuations (rubato) and global tempo changes (Chopin, Op.68, No. 3):

Music with syncopation (Fauré, Op.15):

To make the problem of tempo and beat tracking feasible, most automated approaches rely on two basic assumptions.

- The first assumption is that beat positions occur at note onset positions.

- The second assumption is that beat positions are more or less equally spaced—at least for a certain period of time.

Even though both assumptions may be violated and inappropriate for certain types of music, they are convenient and reasonable for a wide range of music including most rock and popular songs.
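One simple way to inspect how well the second assumption holds for a given annotation is to look at the inter-beat intervals. The sketch below is only an illustration: the helper `local_tempo_from_beats` and both beat sequences are made up for this example and do not come from the annotation files used in this notebook.

```python
import numpy as np

def local_tempo_from_beats(beat_times_sec):
    """Return inter-beat intervals (sec) and the corresponding local tempo values (BPM)."""
    beat_times_sec = np.asarray(beat_times_sec, dtype=float)
    ibi = np.diff(beat_times_sec)   # time between consecutive beats
    return ibi, 60.0 / ibi

# Hypothetical beat annotations (in seconds)
examples = {
    'steady': np.arange(0.0, 4.0, 0.5),
    'rubato': np.array([0.0, 0.5, 1.1, 1.8, 2.4, 2.9, 3.3]),
}

variation = {}
for name, beat_times in examples.items():
    ibi, tempo = local_tempo_from_beats(beat_times)
    # Coefficient of variation: 0 for perfectly equally spaced beats
    variation[name] = np.std(ibi) / np.mean(ibi)
    print(f'{name}: local tempo (BPM) = {np.round(tempo, 1)}, variation = {variation[name]:.3f}')
```

A variation close to zero indicates nearly equally spaced beats, whereas larger values hint at tempo fluctuations such as rubato.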

In the following code cell, we present for each of the above examples a visualization of a spectral-based novelty function along with manually annotated beat positions (quarter note level). Furthermore, a sonification indicates the annotated beats by short click sounds placed on top of the original music recordings.

import os

import sys

import numpy as np

from scipy import signal

from matplotlib import pyplot as plt

import librosa

import IPython.display as ipd

import pandas as pd

sys.path.append('..')

import libfmp.b

import libfmp.c2

import libfmp.c6

%matplotlib inline

def plot_sonify_novelty_beats(fn_wav, fn_ann, title=''):

    # Read the beat annotations (used for plotting)
    ann, label_keys = libfmp.c6.read_annotation_pos(fn_ann, label='onset', header=0)

    # Read the same file again to obtain the beat positions (in seconds) as a 1D array
    df = pd.read_csv(fn_ann, sep=';', keep_default_na=False, header=None)

    beats_sec = df.values[:, 0].astype(float)

    Fs = 22050

    x, Fs = librosa.load(fn_wav, sr=Fs)

    x_duration = len(x)/Fs

    # Spectral-based novelty function
    nov, Fs_nov = libfmp.c6.compute_novelty_spectrum(x, Fs=Fs, N=2048, H=256, gamma=1, M=10, norm=1)

    figsize=(8,1.5)

    fig, ax, line = libfmp.b.plot_signal(nov, Fs_nov, color='k', figsize=figsize,

                                         title=title)

    libfmp.b.plot_annotation_line(ann, ax=ax, label_keys=label_keys,

                                  nontime_axis=True, time_min=0, time_max=x_duration)

    plt.show()

    # Sonification: click sounds at the annotated beats on top of the recording
    x_beats = librosa.clicks(times=beats_sec, sr=Fs, click_freq=1000, length=len(x))

    ipd.display(ipd.Audio(x + x_beats, rate=Fs))

#print('Carlos Gardel: Por Una Cabeza')

#fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_PorUnaCabeza_quarter.csv')

#fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_PorUnaCabeza.wav')

#plot_sonify_novelty_beats(fn_wav, fn_ann)

title = 'Borodin: String Quartet No. 2, 3rd movement'

fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_Borodin-sec39_RWC_quarter.csv')

fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_Borodin-sec39_RWC.wav')

plot_sonify_novelty_beats(fn_wav, fn_ann, title)

title = 'Chopin: Op.68, No. 3'

fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_Chopin.csv')

fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_Chopin.wav')

plot_sonify_novelty_beats(fn_wav, fn_ann, title)

title = 'Fauré: Op.15'

fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_Faure_Op015-01-sec0-12_SMD126.csv')

fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_Faure_Op015-01-sec0-12_SMD126.wav')

plot_sonify_novelty_beats(fn_wav, fn_ann, title)

Tempogram Representations

In Fourier analysis, a (magnitude) spectrogram is a time–frequency representation of a given signal. A large value $\mathcal{Y}(t,\omega)$ of a spectrogram indicates that the signal contains at time instance $t$ a periodic component that corresponds to the frequency $\omega$. We now introduce a similar concept referred to as a tempogram, which indicates for each time instance the local relevance of a specific tempo for a given music recording. Mathematically, we model a tempogram as a function

\begin{equation} \mathcal{T}:\mathbb{R}\times \mathbb{R}_{>0}\to \mathbb{R}_{\geq 0} \end{equation}

depending on a time parameter $t\in\mathbb{R}$ measured in seconds and a tempo parameter $\tau \in \mathbb{R}_{>0}$ measured in beats per minute (BPM). Intuitively, the value $\mathcal{T}(t,\tau)$ indicates the extent to which the signal contains a locally periodic pulse of a given tempo $\tau$ in a neighborhood of time instance $t$. Just as with spectrograms, one computes a tempogram in practice only on a discrete time–tempo grid. As before, we assume that the sampled time axis is given by $[1:N]$. To avoid boundary cases and to simplify the notation in the subsequent considerations, we extend this axis to $\mathbb{Z}$. Furthermore, let $\Theta\subset\mathbb{R}_{>0}$ be a finite set of tempi specified in $\mathrm{BPM}$. Then, a discrete tempogram is a function

\begin{equation} \mathcal{T}:\mathbb{Z}\times \Theta\to \mathbb{R}_{\geq 0}. \end{equation}

Most approaches for deriving a tempogram representation from a given audio recording proceed in two steps.

- Based on the assumption that pulse positions usually go along with note onsets, the music signal is first converted into a novelty function. This function typically consists of impulse-like spikes, each indicating a note onset position.

- In the second step, the locally periodic behavior of the novelty function is analyzed.

To obtain a tempogram, one quantifies the periodic behavior for various periods $T>0$ (given in seconds) in a neighborhood of a given time instance. The rate $\omega=1/T$ (measured in $\mathrm{Hz}$) and the tempo $\tau$ (measured in $\mathrm{BPM}$) are related by

\begin{equation} \tau = 60 \cdot \omega. \end{equation}

For example, a sequence of impulse-like spikes that are regularly spaced with period $T=0.5~\mathrm{sec}$ corresponds to a rate of $\omega=1/T=2~\mathrm{Hz}$ or a tempo of $\tau=120~\mathrm{BPM}$.
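The example of spikes spaced by $T=0.5~\mathrm{sec}$ can be reproduced numerically. The following sketch is one possible way to quantify local periodicity on a discrete time–tempo grid: it correlates windowed sections of a synthetic novelty function with complex exponentials of rate $\omega=\tau/60$. The function name `fourier_tempogram_sketch`, the window length `N`, the hop size `H`, and the tempo set `Theta` are assumptions made for this illustration; this is not the implementation provided by libfmp.

```python
import numpy as np

def fourier_tempogram_sketch(nov, Fs_nov, Theta, N=500, H=50):
    """Illustrative discrete tempogram (rows: tempi in Theta, columns: time frames).

    nov    : novelty function (1D array)
    Fs_nov : sampling rate of the novelty function (Hz)
    Theta  : array of tempi in BPM
    N, H   : window length and hop size (in novelty samples)
    """
    win = np.hanning(N)
    pad = N // 2
    # Zero-pad so that frame n is centered at novelty sample n * H
    nov_pad = np.concatenate([np.zeros(pad), nov, np.zeros(N - pad)])
    t_pad = (np.arange(len(nov_pad)) - pad) / Fs_nov
    num_frames = len(nov) // H + 1
    tempogram = np.zeros((len(Theta), num_frames))
    for k, tau in enumerate(Theta):
        omega = tau / 60.0  # tempo in BPM -> rate in Hz
        x = nov_pad * np.exp(-2j * np.pi * omega * t_pad)
        for n in range(num_frames):
            tempogram[k, n] = np.abs(np.sum(win * x[n * H:n * H + N]))
    t_sec = np.arange(num_frames) * H / Fs_nov
    return tempogram, t_sec

# Synthetic novelty function: spikes every 0.5 sec (period T = 0.5 sec)
Fs_nov = 100
nov = np.zeros(10 * Fs_nov)
nov[::50] = 1.0

Theta = np.arange(30, 201)  # tempo grid in BPM (assumed range)
tempogram, t_sec = fourier_tempogram_sketch(nov, Fs_nov, Theta)

# Dominant tempo per frame
tau_est = Theta[np.argmax(tempogram, axis=0)]
print(tau_est)
```

The tempo grid is deliberately limited to 200 BPM here. For an ideal spike train, integer multiples of the tempo such as 240 BPM would produce equally large values, which relates to the tempo ambiguities discussed further below.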

Pulse Levels

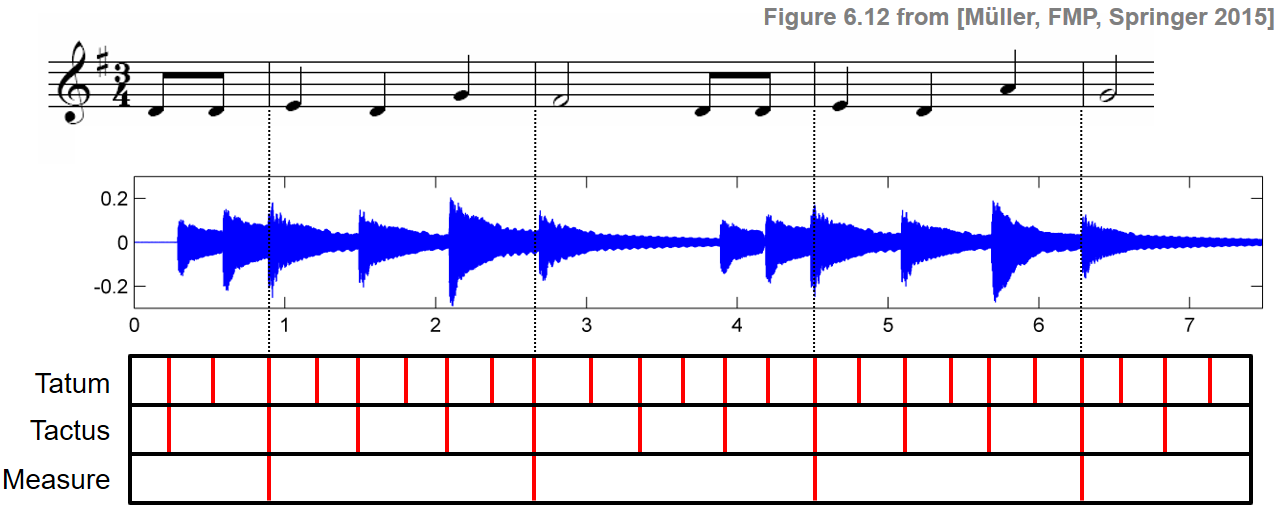

One major problem in determining the tempo of a music recording arises from the fact that pulses in music are often organized in complex hierarchies that represent the rhythm. In particular, there are various levels that are presumed to contribute to the human perception of tempo and beat. The tactus level typically corresponds to the quarter note level and often matches the foot tapping rate. Thinking at a larger musical scale, one may also perceive the tempo at the measure level, in particular when listening to fast music or to highly expressive music with strong rubato. Finally, one may also consider the tatum (temporal atom) level, which refers to the fastest repetition rate of musically meaningful accents occurring in the signal. The next example illustrates these notions using the song "Happy Birthday to You."

In the following code cell, we visualize manually annotated pulse positions for different pulse levels shown on top of a spectral-based novelty function. Furthermore, one can listen to a sonification of the pulse positions overlaid with the original music recordings.

title = 'Pulse on measure level'

fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_HappyBirthday_measure.csv')

fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_HappyBirthday.wav')

plot_sonify_novelty_beats(fn_wav, fn_ann, title)

title = 'Pulse on tactus level'

fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_HappyBirthday_tactus.csv')

fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_HappyBirthday.wav')

plot_sonify_novelty_beats(fn_wav, fn_ann, title)

title = 'Pulse on tatum level'

fn_ann = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_HappyBirthday_tatum.csv')

fn_wav = os.path.join('..', 'data', 'C6', 'FMP_C6_Audio_HappyBirthday.wav')

plot_sonify_novelty_beats(fn_wav, fn_ann, title)

Tempo Octave, Harmonic, and Subharmonic

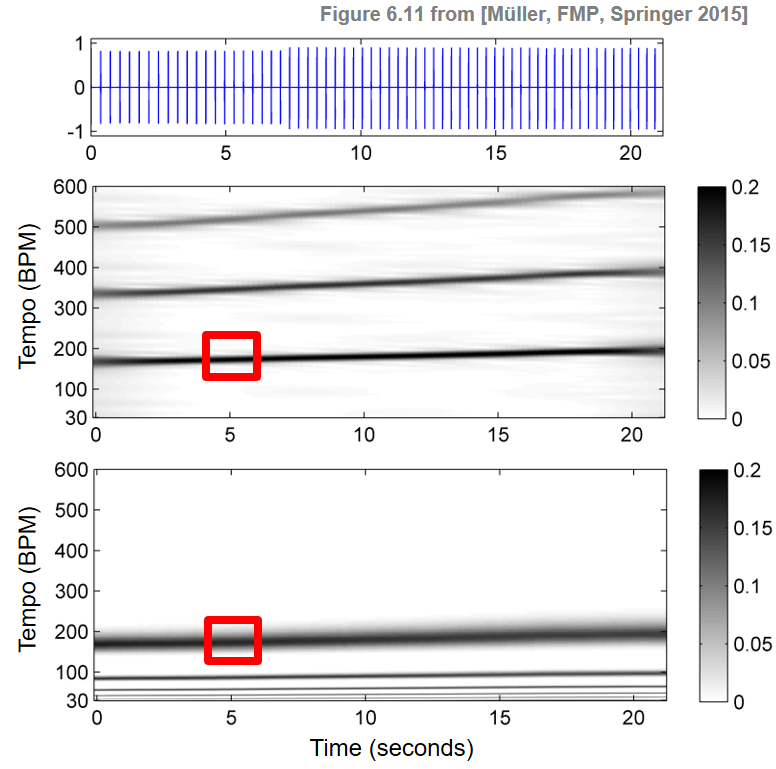

Often the tempo ambiguity that arises from the existence of different pulse levels is also reflected in a tempogram $\mathcal{T}$. Higher pulse levels often correspond to integer multiples $\tau,2\tau,3\tau,\ldots$ of a given tempo $\tau$. As with pitch, we call such integer multiples (tempo) harmonics of $\tau$. Furthermore, integer fractions $\tau,\tau/2,\tau/3,\ldots$ are referred to as (tempo) subharmonics of $\tau$. Analogous to the notion of an octave for musical pitches, the difference between two tempi with half or double the value is called a tempo octave.
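The relations between a tempo, its harmonics, and its subharmonics are simple arithmetic, as the following sketch shows. The function names are hypothetical, and expressing the distance between two tempi in tempo octaves via a base-2 logarithm is an assumption made here in analogy to pitch.

```python
import numpy as np

def tempo_harmonics(tau, num=4):
    """Integer multiples tau, 2*tau, 3*tau, ... of a tempo (BPM)."""
    return tau * np.arange(1, num + 1)

def tempo_subharmonics(tau, num=4):
    """Integer fractions tau, tau/2, tau/3, ... of a tempo (BPM)."""
    return tau / np.arange(1, num + 1)

def tempo_octave_distance(tau_1, tau_2):
    """Signed distance from tau_1 to tau_2 in tempo octaves (1 = double, -1 = half)."""
    return np.log2(tau_2 / tau_1)

tau = 60.0
harmonics = tempo_harmonics(tau)
subharmonics = tempo_subharmonics(tau)

print('Harmonics (BPM):   ', harmonics)
print('Subharmonics (BPM):', subharmonics)
print('Distance 60 -> 120 BPM in tempo octaves:', tempo_octave_distance(60.0, 120.0))
```

Note that only harmonics and subharmonics related by powers of two differ by a whole number of tempo octaves; for example, $3\tau$ is a harmonic of $\tau$ but not a tempo octave above it.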

The following example shows two different types of tempograms for a click track of increasing tempo (rising from $170$ to $200~\mathrm{BPM}$ over the course of $20~\mathrm{sec}$). The first tempogram emphasizes tempo harmonics, whereas the second tempogram emphasizes tempo subharmonics. The entry marked by the red rectangle indicates that the music signal has a dominant tempo of $\tau=180~\mathrm{BPM}$ around time position $t=5~\mathrm{sec}$.

In the following, we will study two conceptually different methods that are used to derive these two tempograms: Fourier-based tempograms that emphasize tempo harmonics and autocorrelation-based tempograms that emphasize tempo subharmonics.

Global Tempo

Assuming a more or less steady tempo, it suffices to determine one global tempo value for the entire recording. Such a value may be obtained by averaging the tempo values obtained from a frame-wise periodicity analysis. For example, based on a tempogram representation, one can average the tempo values over all time frames to obtain a function $\mathcal{T}_\mathrm{Average}:\Theta\to\mathbb{R}_{\geq 0}$ that only depends on $\tau\in\Theta$. Assuming that the relevant time positions lie in the interval $[1:N]$, one may define $\mathcal{T}_\mathrm{Average}$ by

\begin{equation} \label{eq:BeatTempo:TempoAna:AutoCor:TempoAv} \mathcal{T}_\mathrm{Average}(\tau) := \frac{1}{N}\sum_{n\in[1:N]} \mathcal{T}(n,\tau). \end{equation}

The tempo that maximizes this function,

\begin{equation} \label{eq:BeatTempo:TempoAna:AutoCor:TempoAvMax} \hat{\tau} := \underset{\tau\in\Theta}{\mathrm{argmax}}\,\mathcal{T}_\mathrm{Average}(\tau), \end{equation}

then yields an estimate for the global tempo of the recording. Of course, more refined methods for estimating a single tempo value may be applied. For example, instead of using a simple average, one may apply median filtering, which is more robust to outliers and noise.
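The averaging procedure can be sketched in a few lines. The function `global_tempo` and the synthetic tempogram below are assumptions for illustration only. The median variant takes the median over all time frames, which is one simple reading of the median-based refinement mentioned above. In both cases, the estimate is the tempo at which the aggregated function attains its maximum.

```python
import numpy as np

def global_tempo(tempogram, Theta, method='mean'):
    """Estimate a global tempo from a tempogram (rows: tempi in Theta, columns: frames)."""
    if method == 'mean':
        salience = np.mean(tempogram, axis=1)
    elif method == 'median':
        salience = np.median(tempogram, axis=1)
    else:
        raise ValueError("method must be 'mean' or 'median'")
    # Tempo at which the aggregated tempogram is maximal
    return Theta[np.argmax(salience)], salience

# Synthetic tempogram: steady tempo of 120 BPM, a weaker harmonic at 240 BPM,
# and a short, strong outlier at 200 BPM (all values are made up)
Theta = np.arange(30, 301)
tempogram = np.zeros((len(Theta), 50))
tempogram[Theta == 120, :] = 1.0
tempogram[Theta == 240, :] = 0.5
tempogram[Theta == 200, 10:13] = 30.0

tau_mean, _ = global_tempo(tempogram, Theta, method='mean')
tau_median, _ = global_tempo(tempogram, Theta, method='median')
print('Global tempo (mean):  ', tau_mean)
print('Global tempo (median):', tau_median)
```

In this constructed example, three outlier frames are enough to mislead the average, while the median still recovers the steady tempo.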

Further Notes

In the following notebooks, we introduce two conceptually different methods for computing tempogram representations: